21 Examples of Bias and Variance

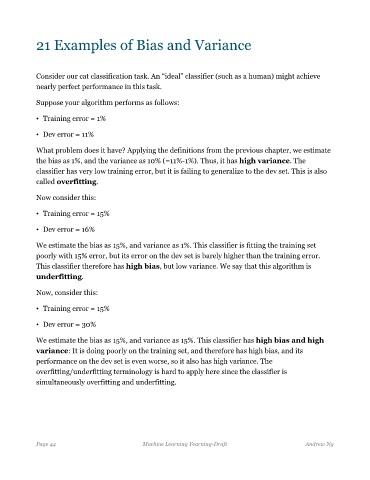

Consider our cat classification task. An “ideal” classifier (such as a human) might achieve nearly perfect performance in this task.

Suppose your algorithm performs as follows:

• Training error = 1%

• Dev error = 11%

What problem does it have? Applying the definitions from the previous chapter, we estimate the bias as 1%, and the variance as 10% (= 11% - 1%). Thus, it has high variance. The classifier has very low training error, but it is failing to generalize to the dev set. This is also called overfitting.
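
The arithmetic behind these estimates can be sketched in a few lines of Python. This is only an illustration, assuming (as in this example) that the ideal error rate is close to 0%, so the training error itself serves as the bias estimate. The function name `estimate_bias_variance` and its parameters are hypothetical, and errors are given in percentage points.

```python
def estimate_bias_variance(train_error_pct, dev_error_pct):
    """Informal estimates of bias and variance, in percentage points.

    Assumes the ideal (e.g. human-level) error is roughly 0%, so the
    training error is used directly as the bias estimate.
    """
    bias = train_error_pct                      # error on the training set
    variance = dev_error_pct - train_error_pct  # extra error on the dev set
    return bias, variance


# The first example above: training error = 1%, dev error = 11%.
bias, variance = estimate_bias_variance(train_error_pct=1.0, dev_error_pct=11.0)
print(f"bias = {bias}%, variance = {variance}%")
```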

Now consider this:

• Training error = 15%

• Dev error = 16%

We estimate the bias as 15%, and variance as 1%. This classifier is fitting the training set poorly with 15% error, but its error on the dev set is barely higher than the training error. This classifier therefore has high bias, but low variance. We say that this algorithm is underfitting.

Now, consider this:

• Training error = 15%

• Dev error = 30%

We estimate the bias as 15%, and variance as 15%. This classifier has high bias and high variance: It is doing poorly on the training set, and therefore has high bias, and its performance on the dev set is even worse, so it also has high variance. The overfitting/underfitting terminology is hard to apply here since the classifier is simultaneously overfitting and underfitting.
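
The three cases in this chapter can be compared side by side with a small script. It is a rough sketch, not a rule from the text: the 5-percentage-point cutoff for calling bias or variance "high" is an arbitrary assumption chosen only to reproduce the labels used above, and the names `diagnose` and `HIGH_THRESHOLD_PCT` are hypothetical. It also assumes the ideal error is near 0%, as in the cat classification task.

```python
# Hypothetical cutoff (in percentage points) for calling bias or variance
# "high". The text gives no such number; this is an assumption made purely
# for illustration.
HIGH_THRESHOLD_PCT = 5.0


def diagnose(train_error_pct, dev_error_pct, threshold_pct=HIGH_THRESHOLD_PCT):
    """Label a classifier from its training and dev errors (in %)."""
    bias = train_error_pct                      # assumes ideal error is ~0%
    variance = dev_error_pct - train_error_pct  # gap between dev and training
    high_bias = bias >= threshold_pct
    high_variance = variance >= threshold_pct

    if high_bias and high_variance:
        return "high bias and high variance"
    if high_bias:
        return "high bias (underfitting)"
    if high_variance:
        return "high variance (overfitting)"
    return "low bias and low variance"


# The three (training error, dev error) pairs discussed in this chapter.
for train_err, dev_err in [(1.0, 11.0), (15.0, 16.0), (15.0, 30.0)]:
    print(f"train = {train_err}%, dev = {dev_err}%: {diagnose(train_err, dev_err)}")
```

In practice the boundary between "low" and "high" depends on the task and on what error rate is achievable, so the threshold should be treated as a knob to adjust rather than a fixed value.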

Page 44 Machine Learning Yearning-Draft Andrew Ng