Page 252 - Mechatronics for Safety, Security and Dependability in a New Era

P. 252

Ch48-I044963.fm Page 236 Tuesday, August 1, 2006 4:04 PM

Ch48-I044963.fm

236

236 Page 236 Tuesday, August 1, 2006 4:04 PM

Observed image Filtered image Reduced image State Action

State

Action

Observed imageFiltered image Reduced image

Io If Ic Ic s s

Mapping matrix

Mapping matrix

averaging W s1 Filtered Reduced Substates

Reduced

Filtered

Substates

s2 images images

images

images

filtering reduction . a

.

filter F .

sm

sigmoid function If1 Ic1 W1

nx x ny nx x ny ncx x ncy g(x) Observed s1

image F1

Io

image feature extraction

Action

state vector extraction

image feature extraction state vector extraction State Action

State

(a) Imagefeature generation model s1

(a) Image feature generation model

F2 If2 Ic2 W2 s2

s2 sE = . ~ a a

.

episode 1

end .

U2 sn

Ur episode NE . . .

. . .

. . .

Fn

Ifn Icn Wn

U1 sn

start State space

State space

(b) Segmentation of supervised data

(b) Segmentation of supervised data (c) Image feature selection model

(c) Image feature selection model

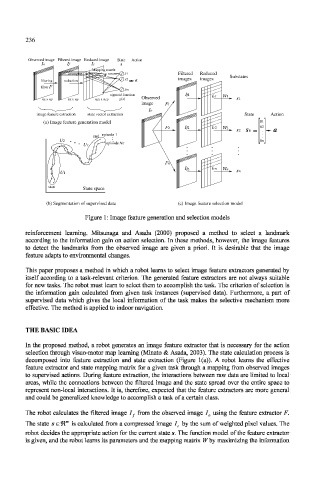

Figure 1: Image feature generation and selection models

reinforcement learning. Mitsunaga and Asada (2000) proposed a method to select a landmark

according to the information gain on action selection. In these methods, however, the image features

to detect the landmarks from the observed image are given a priori. Tt is desirable that the image

feature adapts to environmental changes.

This paper proposes a method in which a robot learns to select image feature extractors generated by

itself according to a task-relevant criterion. The generated feature extractors are not always suitable

for new tasks. The robot must learn to select them to accomplish the task. The criterion of selection is

the information gain calculated from given task instances (supervised data). Furthermore, a part of

supervised data which gives the local information of the task makes the selective mechanism more

effective. The method is applied to indoor navigation.

THE BASIC IDEA

In the proposed method, a robot generates an image feature extractor that is necessary for the action

selection through visuo-motor map learning (Minato & Asada, 2003). The state calculation process is

decomposed into feature extraction and state extraction (Figure l(a)). A robot learns the effective

feature extractor and state mapping matrix for a given task through a mapping from observed images

to supervised actions. During feature extraction, the interactions between raw data are limited to local

areas, while the connections between the filtered image and the state spread over the entire space to

represent non-local interactions. It is, therefore, expected that the feature extractors are more general

and could be generalized knowledge to accomplish a task of a certain class.

The robot calculates the filtered image I f from the observed image /, using the feature extractor F.

The state s e 91"' is calculated from a compressed image I c by the sum of weighted pixel values. The

robot decides the appropriate action for the current state s. The function model of the feature extractor

is given, and the robot learns its parameters and the mapping matrix W by maximizing the information