Page 179 - A First Course In Stochastic Models

P. 179

172 CONTINUOUS-TIME MARKOV CHAINS

6l 5l 4l 3l 2l l

6 5 4 3 2 1 crash

m m

l

2l

h

sleep 2 sleep 1

h

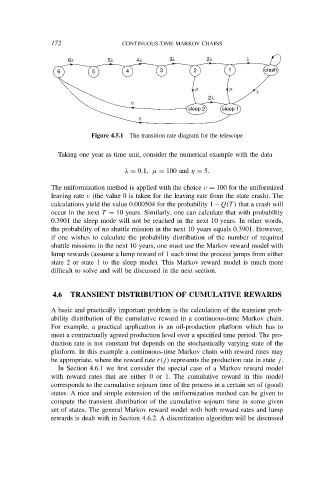

Figure 4.5.1 The transition rate diagram for the telescope

Taking one year as time unit, consider the numerical example with the data

λ = 0.1, µ = 100 and η = 5.

The uniformization method is applied with the choice ν = 100 for the uniformized

leaving rate ν (the value 0 is taken for the leaving rate from the state crash). The

calculations yield the value 0.000504 for the probability 1−Q(T ) that a crash will

occur in the next T = 10 years. Similarly, one can calculate that with probability

0.3901 the sleep mode will not be reached in the next 10 years. In other words,

the probability of no shuttle mission in the next 10 years equals 0.3901. However,

if one wishes to calculate the probability distribution of the number of required

shuttle missions in the next 10 years, one must use the Markov reward model with

lump rewards (assume a lump reward of 1 each time the process jumps from either

state 2 or state 1 to the sleep mode). This Markov reward model is much more

difficult to solve and will be discussed in the next section.

4.6 TRANSIENT DISTRIBUTION OF CUMULATIVE REWARDS

A basic and practically important problem is the calculation of the transient prob-

ability distribution of the cumulative reward in a continuous-time Markov chain.

For example, a practical application is an oil-production platform which has to

meet a contractually agreed production level over a specified time period. The pro-

duction rate is not constant but depends on the stochastically varying state of the

platform. In this example a continuous-time Markov chain with reward rates may

be appropriate, where the reward rate r(j) represents the production rate in state j.

In Section 4.6.1 we first consider the special case of a Markov reward model

with reward rates that are either 0 or 1. The cumulative reward in this model

corresponds to the cumulative sojourn time of the process in a certain set of (good)

states. A nice and simple extension of the uniformization method can be given to

compute the transient distribution of the cumulative sojourn time in some given

set of states. The general Markov reward model with both reward rates and lump

rewards is dealt with in Section 4.6.2. A discretization algorithm will be discussed