Page 60 - Big Data Analytics for Intelligent Healthcare Management

P. 60

52 CHAPTER 3 BIG DATA ANALYTICS IN HEALTHCARE: A CRITICAL ANALYSIS

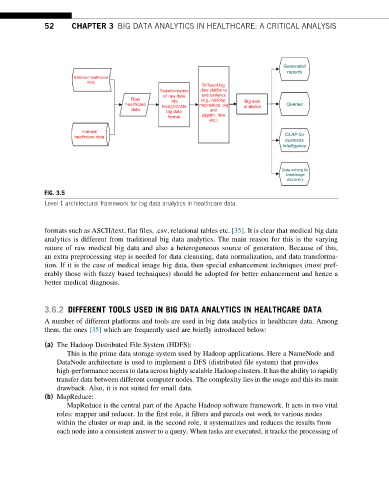

Generated

reports

External healthcare

data

Different big

Transformation data platforms

of raw data and analytics

Raw into (e.g., hadoop, Big data

healthcare recognizable mapreduce, pig analytics Queries

data and

big data

format piglatin, hive

etc.)

Internal

healthcare data OLAP for

business

intelligence

Data mining for

knowledge

discovery

FIG. 3.5

Level 1 architectural framework for big data analytics in healthcare data.

formats such as ASCII/text, flat files, .csv, relational tables etc. [35]. It is clear that medical big data

analytics is different from traditional big data analytics. The main reason for this is the varying

nature of raw medical big data and also a heterogeneous source of generation. Because of this,

an extra preprocessing step is needed for data cleansing, data normalization, and data transforma-

tion. If it is the case of medical image big data, then special enhancement techniques (most pref-

erably those with fuzzy based techniques) should be adopted for better enhancement and hence a

better medical diagnosis.

3.6.2 DIFFERENT TOOLS USED IN BIG DATA ANALYTICS IN HEALTHCARE DATA

A number of different platforms and tools are used in big data analytics in healthcare data. Among

them, the ones [35] which are frequently used are briefly introduced below:

(a) The Hadoop Distributed File System (HDFS):

This is the prime data storage system used by Hadoop applications. Here a NameNode and

DataNode architecture is used to implement a DFS (distributed file system) that provides

high-performance access to data across highly scalable Hadoop clusters. It has the ability to rapidly

transfer data between different computer nodes. The complexity lies in the usage and this its main

drawback. Also, it is not suited for small data.

(b) MapReduce:

MapReduce is the central part of the Apache Hadoop software framework. It acts in two vital

roles: mapper and reducer. In the first role, it filters and parcels out work to various nodes

within the cluster or map and, in the second role, it systematizes and reduces the results from

each node into a consistent answer to a query. When tasks are executed, it tracks the processing of