Page 20 -

P. 20

8 Establish a single-number evaluation metric

for your team to optimize

Classification accuracy is an example of a single-number evaluation metric: You run

your classifier on the dev set (or test set), and get back a single number about what fraction

of examples it classified correctly. According to this metric, if classifier A obtains 97%

accuracy, and classifier B obtains 90% accuracy, then we judge classifier A to be superior.

3

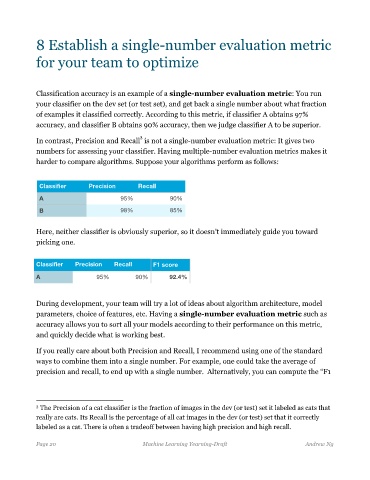

In contrast, Precision and Recall is not a single-number evaluation metric: It gives two

numbers for assessing your classifier. Having multiple-number evaluation metrics makes it

harder to compare algorithms. Suppose your algorithms perform as follows:

Classifier Precision Recall

A 95% 90%

B 98% 85%

Here, neither classifier is obviously superior, so it doesn’t immediately guide you toward

picking one.

Classifier Precision Recall F1 score

A 95% 90% 92.4%

During development, your team will try a lot of ideas about algorithm architecture, model

parameters, choice of features, etc. Having a single-number evaluation metric such as

accuracy allows you to sort all your models according to their performance on this metric,

and quickly decide what is working best.

If you really care about both Precision and Recall, I recommend using one of the standard

ways to combine them into a single number. For example, one could take the average of

precision and recall, to end up with a single number. Alternatively, you can compute the “F1

3 The Precision of a cat classifier is the fraction of images in the dev (or test) set it labeled as cats that

really are cats. Its Recall is the percentage of all cat images in the dev (or test) set that it correctly

labeled as a cat. There is often a tradeoff between having high precision and high recall.

Page 20 Machine Learning Yearning-Draft Andrew Ng