Page 96 -

P. 96

78 3 Data Mining

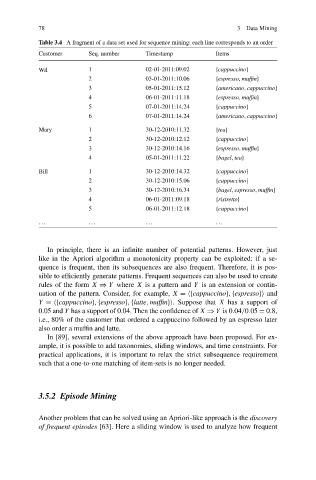

Table 3.4 A fragment of a data set used for sequence mining: each line corresponds to an order

Customer Seq. number Timestamp Items

Wil 1 02-01-2011:09.02 {cappuccino}

2 03-01-2011:10.06 {espresso,muffin}

3 05-01-2011:15.12 {americano,cappuccino}

4 06-01-2011:11.18 {espresso,muffin}

5 07-01-2011:14.24 {cappuccino}

6 07-01-2011:14.24 {americano,cappuccino}

Mary 1 30-12-2010:11.32 {tea}

2 30-12-2010:12.12 {cappuccino}

3 30-12-2010:14.16 {espresso,muffin}

4 05-01-2011:11.22 {bagel,tea}

Bill 1 30-12-2010:14.32 {cappuccino}

2 30-12-2010:15.06 {cappuccino}

3 30-12-2010:16.34 {bagel,espresso,muffin}

4 06-01-2011:09.18 {ristretto}

5 06-01-2011:12.18 {cappuccino}

... ... ... ...

In principle, there is an infinite number of potential patterns. However, just

like in the Apriori algorithm a monotonicity property can be exploited: if a se-

quence is frequent, then its subsequences are also frequent. Therefore, it is pos-

sible to efficiently generate patterns. Frequent sequences can also be used to create

rules of the form X ⇒ Y where X is a pattern and Y is an extension or contin-

uation of the pattern. Consider, for example, X = {cappuccino},{espresso}

and

Y = {cappuccino},{espresso},{latte,muffin}

. Suppose that X has a support of

0.05 and Y has a support of 0.04. Then the confidence of X ⇒ Y is 0.04/0.05 = 0.8,

i.e., 80% of the customer that ordered a cappuccino followed by an espresso later

also order a muffin and latte.

In [89], several extensions of the above approach have been proposed. For ex-

ample, it is possible to add taxonomies, sliding windows, and time constraints. For

practical applications, it is important to relax the strict subsequence requirement

such that a one-to-one matching of item-sets is no longer needed.

3.5.2 Episode Mining

Another problem that can be solved using an Apriori-like approach is the discovery

of frequent episodes [63]. Here a sliding window is used to analyze how frequent