Page 153 - Sensing, Intelligence, Motion : How Robots and Humans Move in an Unstructured World


128 MOTION PLANNING FOR A MOBILE ROBOT

T

T

r u

r u

S S

(a) (b)

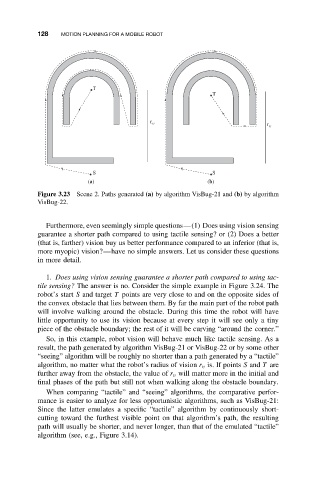

Figure 3.23 Scene 2. Paths generated (a) by algorithm VisBug-21 and (b) by algorithm VisBug-22.

Furthermore, even seemingly simple questions have no simple answers: (1) Does using vision sensing guarantee a shorter path than using tactile sensing? (2) Does better (that is, farther-reaching) vision buy better performance than inferior (that is, more myopic) vision? Let us consider these questions in more detail.

1. Does using vision sensing guarantee a shorter path than using tactile sensing? The answer is no. Consider the simple example in Figure 3.24. The robot’s start point S and target point T lie very close to, and on opposite sides of, the convex obstacle between them. By far the largest part of the robot’s path will be spent walking around the obstacle. During this time the robot will have little opportunity to use its vision, because at every step it will see only a tiny piece of the obstacle boundary; the rest of the boundary curves “around the corner.” So, in this example, robot vision behaves much like tactile sensing. As a result, the path generated by algorithm VisBug-21, by VisBug-22, or by some other “seeing” algorithm will be hardly shorter than a path generated by a “tactile” algorithm, no matter what the robot’s radius of vision r_v is. If points S and T are farther away from the obstacle, the value of r_v will matter more in the initial and final phases of the path, but still not when walking along the obstacle boundary.
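The argument can be made concrete with an idealized version of this scene. Assume (this geometry is an illustration, not taken from Figure 3.24) a disk-shaped obstacle of radius R, with S and T each at distance d from its center on exactly opposite sides. No algorithm, however far it sees, can beat the Euclidean shortest path, which here is two tangent segments plus the boundary arc between the tangent points: 2·sqrt(d² − R²) + R·(π − 2·arccos(R/d)). The sketch below compares that lower bound with an assumed Bug2-style tactile path of length 2·(d − R) + πR (straight to the obstacle, halfway around it, straight to T). The function names are hypothetical.

```python
import math


def shortest_path_length(d: float, R: float) -> float:
    """Euclidean shortest path from S to T around a disk of radius R, where S and T
    lie at distance d from the disk center on exactly opposite sides (d >= R).
    No algorithm, whatever its radius of vision, can produce a shorter path."""
    if d < R:
        raise ValueError("S and T must lie outside the obstacle (d >= R)")
    tangent = math.sqrt(d * d - R * R)             # S to its tangent point (same for T)
    arc_angle = math.pi - 2.0 * math.acos(R / d)   # boundary arc between the tangent points
    return 2.0 * tangent + R * arc_angle


def tactile_path_length(d: float, R: float) -> float:
    """Bug2-style tactile path (assumed): straight from S to the obstacle, around
    half of its boundary, then straight to T."""
    if d < R:
        raise ValueError("S and T must lie outside the obstacle (d >= R)")
    return 2.0 * (d - R) + math.pi * R


if __name__ == "__main__":
    R = 1.0
    for d in (1.0, 1.05, 1.5, 3.0):
        best = shortest_path_length(d, R)
        tactile = tactile_path_length(d, R)
        print(f"d = {d:4.2f}: lower bound {best:.3f}, tactile {tactile:.3f}, ratio {best / tactile:.3f}")
```

When d is close to R the ratio approaches 1, so even unlimited vision saves almost nothing; as d grows the ratio drops, in line with the remark that r_v matters more in the initial and final phases of the path.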

When comparing “tactile” and “seeing” algorithms, the comparative performance is easier to analyze for less opportunistic algorithms such as VisBug-21. Since VisBug-21 emulates a specific “tactile” algorithm by continuously shortcutting toward the farthest visible point on that algorithm’s path, the resulting path will usually be shorter, and never longer, than that of the emulated “tactile” algorithm (see, e.g., Figure 3.14).
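The shortcutting idea can be illustrated with a deliberately simplified sketch, which is not the book's VisBug-21 procedure. It assumes that the emulated “tactile” path is known in advance as a list of sampled points, that obstacles are disks, and that a point counts as visible if it lies within r_v and the straight segment to it does not enter any obstacle. From the current point, the robot jumps to the farthest visible point along the tactile path, falling back to the path's next sample when nothing farther is visible. Each jump replaces a stretch of the tactile path with a straight segment between two of its points, so by the triangle inequality the result can never be longer. All names (`shortcut_path`, `segment_clear`, `tactile_path_around_disk`, and so on) are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Disk = Tuple[float, float, float]  # (center_x, center_y, radius)


def path_length(path: Sequence[Point]) -> float:
    """Total length of a polyline."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def segment_clear(p: Point, q: Point, disks: Sequence[Disk], eps: float = 1e-9) -> bool:
    """True if segment pq does not enter the interior of any disk (touching is allowed)."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    seg_len2 = dx * dx + dy * dy
    for cx, cy, r in disks:
        # Parameter of the point on pq closest to the disk center, clamped to [0, 1].
        t = 0.0 if seg_len2 == 0.0 else ((cx - p[0]) * dx + (cy - p[1]) * dy) / seg_len2
        t = max(0.0, min(1.0, t))
        nx, ny = p[0] + t * dx, p[1] + t * dy
        if math.hypot(cx - nx, cy - ny) < r - eps:
            return False
    return True


def shortcut_path(tactile: Sequence[Point], r_v: float, disks: Sequence[Disk]) -> List[Point]:
    """Greedy shortcutting along a precomputed 'tactile' path (simplified, hypothetical)."""
    result = [tactile[0]]
    i, last = 0, len(tactile) - 1
    while i < last:
        here = tactile[i]
        nxt = i + 1  # fallback: take the next step of the emulated path itself
        for j in range(last, i + 1, -1):  # search from the far end of the path backward
            cand = tactile[j]
            if math.dist(here, cand) <= r_v and segment_clear(here, cand, disks):
                nxt = j
                break
        result.append(tactile[nxt])
        i = nxt
    return result


def tactile_path_around_disk(n_line: int = 20, n_arc: int = 180) -> List[Point]:
    """Bug2-style path from S=(-2,0) to T=(2,0) around a unit disk at the origin:
    straight to the disk, over its upper half, then straight to T."""
    pts = [(-2.0 + k / n_line, 0.0) for k in range(n_line)]
    pts += [(-math.cos(math.pi * k / n_arc), math.sin(math.pi * k / n_arc))
            for k in range(n_arc + 1)]
    pts += [(1.0 + k / n_line, 0.0) for k in range(1, n_line + 1)]
    return pts


if __name__ == "__main__":
    disks = [(0.0, 0.0, 1.0)]
    tactile = tactile_path_around_disk()
    print(f"tactile path length: {path_length(tactile):.3f}")
    for r_v in (0.01, 0.3, 1.0, 10.0):
        seen = shortcut_path(tactile, r_v, disks)
        print(f"r_v = {r_v:5.2f}: shortcut path length = {path_length(seen):.3f}")
```

In this toy scene a very small r_v reproduces the tactile path exactly, while a large r_v yields roughly the tangent, arc, tangent path around the disk. The sketch makes no claim about how path length behaves between those extremes, which is the subject of question (2).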