
170 BARBER AND GRASER

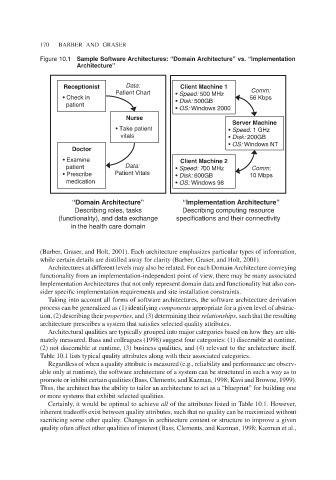

Figure 10.1 Sample Software Architectures: “Domain Architecture” vs. “Implementation Architecture”

[Figure 10.1 panels. “Domain Architecture”: describing roles, tasks (functionality), and data exchange in the health care domain (Receptionist: check in patient; Nurse: take patient vitals; Doctor: examine patient, prescribe medication; data exchanged: Patient Chart, Patient Vitals). “Implementation Architecture”: describing computing resource specifications and their connectivity (Client Machine 1: 500 MHz, 500GB disk, Windows 2000; Server Machine: 1 GHz, 200GB disk, Windows NT; Client Machine 2: 700 MHz, 600GB disk, Windows 98; communication links: 56 Kbps and 10 Mbps).]

(Barber, Graser, and Holt, 2001). Each architecture emphasizes particular types of information, while certain details are distilled away for clarity (Barber, Graser, and Holt, 2001).
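To make the contrast concrete, the two views in Figure 10.1 can be sketched as separate Python data models. This is a minimal illustration, not the authors' notation: the class and field names (`Role`, `DataExchange`, `Machine`, `Link`, and so on) are hypothetical, and both the sender and receiver of each data item and the pairing of each link with a particular client machine are assumptions read off the figure layout.

```python
from dataclasses import dataclass

# Domain Architecture: roles, their tasks, and the data they exchange.
@dataclass(frozen=True)
class Role:
    name: str
    tasks: tuple[str, ...]

@dataclass(frozen=True)
class DataExchange:
    sender: str
    receiver: str
    data: str

@dataclass(frozen=True)
class DomainArchitecture:
    roles: tuple[Role, ...]
    exchanges: tuple[DataExchange, ...]

# Implementation Architecture: machines and the links that connect them.
@dataclass(frozen=True)
class Machine:
    name: str
    speed: str
    disk: str
    os: str

@dataclass(frozen=True)
class Link:
    end_a: str
    end_b: str
    bandwidth: str

@dataclass(frozen=True)
class ImplementationArchitecture:
    machines: tuple[Machine, ...]
    links: tuple[Link, ...]

domain = DomainArchitecture(
    roles=(
        Role("Receptionist", ("check in patient",)),
        Role("Nurse", ("take patient vitals",)),
        Role("Doctor", ("examine patient", "prescribe medication")),
    ),
    # Assumed sender/receiver pairs; the figure only names the data.
    exchanges=(
        DataExchange("Receptionist", "Nurse", "Patient Chart"),
        DataExchange("Nurse", "Doctor", "Patient Vitals"),
    ),
)

implementation = ImplementationArchitecture(
    machines=(
        Machine("Client Machine 1", "500 MHz", "500GB", "Windows 2000"),
        Machine("Server Machine", "1 GHz", "200GB", "Windows NT"),
        Machine("Client Machine 2", "700 MHz", "600GB", "Windows 98"),
    ),
    # Assumed topology: each client connects directly to the server.
    links=(
        Link("Client Machine 1", "Server Machine", "56 Kbps"),
        Link("Client Machine 2", "Server Machine", "10 Mbps"),
    ),
)
```

Neither model has fields for the other's concerns: the domain view carries no hardware, and the implementation view carries no roles or tasks, which is one way to see details being distilled away.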

Architectures at different levels may also be related. For each Domain Architecture conveying functionality from an implementation-independent point of view, there may be many associated Implementation Architectures that not only represent domain data and functionality but also consider specific implementation requirements and site installation constraints.
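The one-to-many relationship can be sketched as a domain architecture that holds several implementation architectures, each of which must still account for every domain role. This is a hypothetical Python sketch: `DomainArchitecture`, `ImplementationArchitecture`, `role_to_machine`, the role placement at Site A, and the whole of Site B are invented for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class ImplementationArchitecture:
    # Hypothetical shape: one site, which machine hosts each domain role,
    # and free-text site installation constraints.
    site: str
    role_to_machine: dict[str, str]
    constraints: list[str] = field(default_factory=list)

@dataclass
class DomainArchitecture:
    # Implementation-independent view: only the roles are recorded here.
    roles: frozenset[str]
    implementations: list[ImplementationArchitecture] = field(default_factory=list)

    def add_implementation(self, impl: ImplementationArchitecture) -> None:
        # An implementation must still represent every domain role.
        missing = self.roles - set(impl.role_to_machine)
        if missing:
            raise ValueError(f"{impl.site}: no machine hosts {sorted(missing)}")
        self.implementations.append(impl)

clinic = DomainArchitecture(frozenset({"Receptionist", "Nurse", "Doctor"}))

# Site A reuses the client machines of Figure 10.1 (role placement assumed).
clinic.add_implementation(ImplementationArchitecture(
    site="Site A",
    role_to_machine={"Receptionist": "Client Machine 1",
                     "Nurse": "Client Machine 2",
                     "Doctor": "Client Machine 2"},
    constraints=["one client link limited to 56 Kbps"],
))

# Site B is invented: a small office running every role on one machine.
clinic.add_implementation(ImplementationArchitecture(
    site="Site B",
    role_to_machine={role: "Office PC" for role in clinic.roles},
    constraints=["single machine, no network"],
))
```

An implementation that leaves a domain role unhosted is rejected, so every Implementation Architecture attached to the domain still represents the domain's functionality, while each is free to record its own site constraints.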

Across all forms of software architecture, the architecture derivation process can be generalized as (1) identifying components appropriate for a given level of abstraction, (2) describing their properties, and (3) determining their relationships, such that the resulting architecture prescribes a system that satisfies selected quality attributes.
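The three-step derivation process can be sketched as methods on a generic architecture model, followed by an evaluation against selected quality attributes. The sketch assumes a hypothetical `Architecture` class, and its two quality checks are crude, invented proxies (connectivity and a coupling limit), not measures proposed in the source.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Component:
    name: str
    properties: dict[str, object] = field(default_factory=dict)

@dataclass
class Architecture:
    level: str  # level of abstraction, e.g. "domain" or "implementation"
    components: dict[str, Component] = field(default_factory=dict)
    relationships: list[tuple[str, str, str]] = field(default_factory=list)

    # Step 1: identify components appropriate for this level of abstraction.
    def identify(self, *names: str) -> None:
        for name in names:
            self.components.setdefault(name, Component(name))

    # Step 2: describe the properties of an identified component.
    def describe(self, name: str, **props: object) -> None:
        self.components[name].properties.update(props)

    # Step 3: determine a relationship between two identified components.
    def relate(self, source: str, kind: str, target: str) -> None:
        if source not in self.components or target not in self.components:
            raise KeyError(f"unidentified component in {source!r} -> {target!r}")
        self.relationships.append((source, kind, target))

def evaluate(arch: Architecture,
             checks: dict[str, Callable[[Architecture], bool]]) -> dict[str, bool]:
    # Report which selected quality checks the resulting architecture passes.
    return {quality: check(arch) for quality, check in checks.items()}

def degree(arch: Architecture, name: str) -> int:
    # Number of relationships in which a component takes part.
    return sum(name in (s, t) for s, _, t in arch.relationships)

arch = Architecture(level="domain")
arch.identify("Receptionist", "Nurse", "Doctor")
arch.describe("Doctor", tasks=["examine patient", "prescribe medication"])
arch.relate("Receptionist", "sends Patient Chart to", "Nurse")
arch.relate("Nurse", "sends Patient Vitals to", "Doctor")

# Hypothetical proxy checks standing in for real quality attributes.
checks = {
    "every component participates": lambda a: all(degree(a, n) > 0 for n in a.components),
    "low coupling (at most 2 links each)": lambda a: all(degree(a, n) <= 2 for n in a.components),
}
result = evaluate(arch, checks)
```

The same three steps would apply at the implementation level; only the components (machines instead of roles) and the relationships (network links instead of data exchanges) change.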

Architectural qualities are typically grouped into major categories based on how they are ultimately measured. Bass and colleagues (1998) suggest four categories: (1) qualities discernible at runtime, (2) qualities not discernible at runtime, (3) business qualities, and (4) qualities relevant to the architecture itself. Table 10.1 lists typical quality attributes along with their associated categories.
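The four categories can be encoded as an enumeration with a lookup from attribute to category. The category names follow the text; apart from performance and reliability, which the text places at runtime, the attribute-to-category assignments below are illustrative assumptions, and Table 10.1 remains the authoritative list for this chapter.

```python
from enum import Enum

class QualityCategory(Enum):
    # The four categories attributed to Bass and colleagues (1998).
    DISCERNIBLE_AT_RUNTIME = "discernible at runtime"
    NOT_DISCERNIBLE_AT_RUNTIME = "not discernible at runtime"
    BUSINESS = "business quality"
    ARCHITECTURE_ITSELF = "relevant to the architecture itself"

# Illustrative assignments only (assumed); see Table 10.1 for the chapter's list.
ATTRIBUTE_CATEGORY: dict[str, QualityCategory] = {
    "performance": QualityCategory.DISCERNIBLE_AT_RUNTIME,
    "reliability": QualityCategory.DISCERNIBLE_AT_RUNTIME,
    "modifiability": QualityCategory.NOT_DISCERNIBLE_AT_RUNTIME,
    "portability": QualityCategory.NOT_DISCERNIBLE_AT_RUNTIME,
    "cost": QualityCategory.BUSINESS,
    "time to market": QualityCategory.BUSINESS,
    "conceptual integrity": QualityCategory.ARCHITECTURE_ITSELF,
}

def attributes_in(category: QualityCategory) -> list[str]:
    # All attributes grouped under one category, sorted for stable output.
    return sorted(a for a, c in ATTRIBUTE_CATEGORY.items() if c is category)

def measured_at_runtime(attribute: str) -> bool:
    # Only runtime-discernible qualities are observed by executing the system.
    return ATTRIBUTE_CATEGORY[attribute] is QualityCategory.DISCERNIBLE_AT_RUNTIME
```

Grouping by measurement method in this way tells the architect which qualities can only be checked against a running system and which must be judged from the design or from business data.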

Regardless of when a quality attribute is measured (e.g., reliability and performance are observable only at runtime), the software architecture of a system can be structured to promote or inhibit certain qualities (Bass, Clements, and Kazman, 1998; Kavi and Browne, 1999). Thus, the architect can tailor an architecture to act as a “blueprint” for building one or more systems that exhibit selected qualities.
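One simple way to picture tailoring an architecture toward selected qualities is a weighted score over candidate structures. This is a hypothetical illustration, not a method from the source: the candidate names and their +1/0/-1 effects are invented, and the weights stand in for the architect's priorities.

```python
# Hypothetical effect of each candidate structure on each quality:
# +1 promotes, -1 inhibits, 0 neutral. Values are illustrative, not measured.
CANDIDATES: dict[str, dict[str, int]] = {
    "single central server": {"performance": 0, "reliability": -1, "cost": 1, "modifiability": 0},
    "replicated servers": {"performance": 1, "reliability": 1, "cost": -1, "modifiability": 0},
    "layered services": {"performance": -1, "reliability": 0, "cost": 0, "modifiability": 1},
}

def score(effects: dict[str, int], priorities: dict[str, int]) -> int:
    # Weighted sum over the qualities the architect selected; others are ignored.
    return sum(weight * effects.get(quality, 0) for quality, weight in priorities.items())

def tailor(priorities: dict[str, int]) -> str:
    # Pick the candidate whose effects best match the selected qualities.
    return max(CANDIDATES, key=lambda name: score(CANDIDATES[name], priorities))

print(tailor({"reliability": 3, "cost": 1}))  # replicated servers
```

Changing the priorities changes the chosen structure (for example, weighting only cost selects the single central server), and in this invented data every candidate inhibits at least one quality, which anticipates the tradeoffs discussed below.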

Ideally, an architecture would achieve all of the attributes listed in Table 10.1. However, inherent tradeoffs exist between quality attributes, such that no quality can be maximized without sacrificing some other quality. Changes in architecture content or structure made to improve a given quality often affect other qualities of interest (Bass, Clements, and Kazman, 1998; Kazman et al.,