Page 233 - Artificial Intelligence in the Age of Neural Networks and Brain Computing


224 CHAPTER 11 Deep Learning Approaches to Electrophysiological

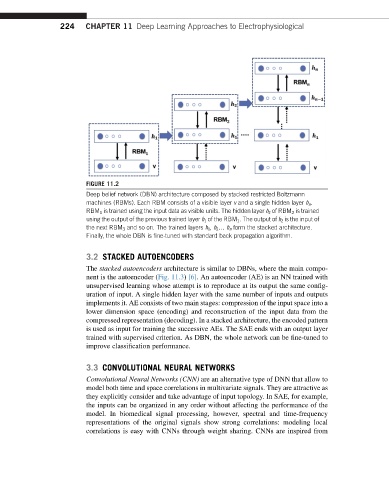

FIGURE 11.2

Deep belief network (DBN) architecture composed of stacked restricted Boltzmann machines (RBMs). Each RBM consists of a visible layer $v$ and a single hidden layer $h_n$. RBM$_1$ is trained using the input data as visible units. The hidden layer $h_2$ of RBM$_2$ is trained using the output of the previously trained layer $h_1$ of RBM$_1$. The output of $h_2$ is the input of the next RBM$_3$, and so on. The trained layers $h_1, h_2, \ldots, h_n$ form the stacked architecture. Finally, the whole DBN is fine-tuned with the standard backpropagation algorithm.
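The greedy, layer-wise procedure in Fig. 11.2 can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the chapter's implementation. The figure does not say how each RBM is trained, so this sketch assumes binary units and one-step contrastive divergence (CD-1). The class `RBM`, the function `pretrain_dbn`, the layer sizes, and the random input are all hypothetical. The final backpropagation fine-tuning of the whole DBN is omitted.

```python
import numpy as np


class RBM:
    """Bernoulli-Bernoulli RBM; CD-1 training is an assumption, not from the source."""

    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        self.W = rng.normal(0.0, 0.01, size=(n_visible, n_hidden))
        self.b_v = np.zeros(n_visible)  # visible biases
        self.b_h = np.zeros(n_hidden)   # hidden biases

    @staticmethod
    def _sigmoid(x):
        return 1.0 / (1.0 + np.exp(-x))

    def hidden_probs(self, v):
        # p(h = 1 | v) for every hidden unit
        return self._sigmoid(v @ self.W + self.b_h)

    def visible_probs(self, h):
        # p(v = 1 | h) for every visible unit
        return self._sigmoid(h @ self.W.T + self.b_v)

    def train(self, data, epochs=10, lr=0.1):
        n = data.shape[0]
        for _ in range(epochs):
            # Positive phase: hidden units driven by the data
            ph0 = self.hidden_probs(data)
            h0 = (self.rng.random(ph0.shape) < ph0).astype(float)
            # Negative phase: one Gibbs step gives a reconstruction
            v1 = self.visible_probs(h0)
            ph1 = self.hidden_probs(v1)
            # CD-1 parameter updates (averaged over the batch)
            self.W += lr * (data.T @ ph0 - v1.T @ ph1) / n
            self.b_v += lr * (data - v1).mean(axis=0)
            self.b_h += lr * (ph0 - ph1).mean(axis=0)


def pretrain_dbn(data, hidden_sizes, rng, epochs=10):
    """Hypothetical helper: train RBM_1 on the data, then each RBM_k on h_{k-1}."""
    rbms, layer_input = [], data
    for n_hidden in hidden_sizes:
        rbm = RBM(layer_input.shape[1], n_hidden, rng)
        rbm.train(layer_input, epochs=epochs)
        rbms.append(rbm)
        # Hidden activations of this RBM become the visible data of the next one
        layer_input = rbm.hidden_probs(layer_input)
    return rbms, layer_input


rng = np.random.default_rng(0)
X = (rng.random((100, 16)) < 0.5).astype(float)  # hypothetical binary inputs
rbms, top = pretrain_dbn(X, hidden_sizes=[12, 8, 4], rng=rng)
print([r.W.shape for r in rbms], top.shape)
```

The stack of trained weight matrices would then initialize a feed-forward network, which backpropagation fine-tunes as the caption describes.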

3.2 STACKED AUTOENCODERS

The stacked autoencoder (SAE) architecture is similar to that of DBNs, with the autoencoder as its main component (Fig. 11.3) [6]. An autoencoder (AE) is an NN trained without supervision to reproduce its input at its output. It is implemented by a single hidden layer, with the same number of input and output units. An AE operates in two main stages: compression of the input space into a lower-dimensional space (encoding) and reconstruction of the input data from the compressed representation (decoding). In a stacked architecture, the encoded pattern is used as input for training the successive AEs. The SAE ends with an output layer trained with a supervised criterion. As with DBNs, the whole network can then be fine-tuned to improve classification performance.
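The SAE procedure can be illustrated with a small NumPy sketch. Each AE learns to reconstruct its input, and its code then feeds the next AE. A supervised output layer is trained last on the deepest code. Several details here are assumptions made for illustration and do not come from the source. Each AE uses a sigmoid encoder, a linear decoder, and a squared-error loss trained by plain gradient descent. The supervised output layer is softmax regression. All names (`Autoencoder`, `pretrain_sae`, `train_softmax`), the sizes, and the data are hypothetical. End-to-end fine-tuning is omitted.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class Autoencoder:
    """One hidden layer: sigmoid encoder, linear decoder (both assumed)."""

    def __init__(self, n_in, n_code, rng):
        self.W_enc = rng.normal(0.0, 0.1, size=(n_in, n_code))
        self.b_enc = np.zeros(n_code)
        self.W_dec = rng.normal(0.0, 0.1, size=(n_code, n_in))
        self.b_dec = np.zeros(n_in)

    def encode(self, x):   # compression into the lower-dimensional code
        return sigmoid(x @ self.W_enc + self.b_enc)

    def decode(self, z):   # reconstruction of the input from the code
        return z @ self.W_dec + self.b_dec

    def train(self, x, epochs=200, lr=0.1):
        n = x.shape[0]
        for _ in range(epochs):
            z = self.encode(x)
            # Gradient of loss = sum of squared errors / (2n) w.r.t. the output
            err = (self.decode(z) - x) / n
            dz = (err @ self.W_dec.T) * z * (1.0 - z)  # backprop through sigmoid
            self.W_dec -= lr * (z.T @ err)
            self.b_dec -= lr * err.sum(axis=0)
            self.W_enc -= lr * (x.T @ dz)
            self.b_enc -= lr * dz.sum(axis=0)

    def reconstruction_error(self, x):
        return float(np.mean((self.decode(self.encode(x)) - x) ** 2))


def pretrain_sae(x, code_sizes, rng):
    """Train AEs one at a time; each encoded pattern is the next AE's input."""
    aes, layer_input = [], x
    for n_code in code_sizes:
        ae = Autoencoder(layer_input.shape[1], n_code, rng)
        ae.train(layer_input)
        aes.append(ae)
        layer_input = ae.encode(layer_input)
    return aes, layer_input


def train_softmax(z, y, n_classes, epochs=500, lr=0.5):
    """Supervised output layer (softmax regression) on the deepest code."""
    W, b = np.zeros((z.shape[1], n_classes)), np.zeros(n_classes)
    onehot = np.eye(n_classes)[y]
    for _ in range(epochs):
        logits = z @ W + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        p = np.exp(logits)
        p /= p.sum(axis=1, keepdims=True)
        g = (p - onehot) / len(y)  # cross-entropy gradient w.r.t. logits
        W -= lr * (z.T @ g)
        b -= lr * g.sum(axis=0)
    return W, b


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 20))          # hypothetical feature vectors
y = (X[:, 0] > 0).astype(int)           # hypothetical binary labels
aes, codes = pretrain_sae(X, code_sizes=[10, 5], rng=rng)
W_out, b_out = train_softmax(codes, y, n_classes=2)
pred = np.argmax(codes @ W_out + b_out, axis=1)
print(codes.shape, aes[0].reconstruction_error(X))
```

The sketch keeps unsupervised pretraining and the supervised output layer as separate steps to mirror the text. In practice, a fine-tuning pass would then update all the weights jointly with backpropagation.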

3.3 CONVOLUTIONAL NEURAL NETWORKS

Convolutional neural networks (CNNs) are an alternative type of DNN that can model both temporal and spatial correlations in multivariate signals. They are attractive because they explicitly consider, and take advantage of, the input topology. In an SAE, for example, the inputs can be arranged in any order without affecting the performance of the model. In biomedical signal processing, however, spectral and time-frequency representations of the original signals show strong correlations, and such local correlations are easy to model with CNNs through weight sharing. CNNs are inspired by