Page 35 - Classification Parameter Estimation & State Estimation An Engg Approach Using MATLAB


24 DETECTION AND CLASSIFICATION

classification, but fully in terms of the prior probabilities and the conditional probability densities:

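$$\hat{\omega}_{\mathrm{MAP}}(\mathbf{z}) = \underset{\omega \in \Omega}{\operatorname{argmax}} \{ p(\mathbf{z}|\omega) P(\omega) \} \qquad (2.12)$$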

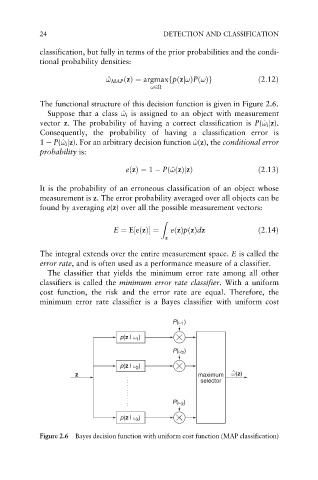

The functional structure of this decision function is given in Figure 2.6.
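As an illustration of the decision function (2.12), the following is a minimal sketch in Python. The two classes `w1` and `w2`, their prior probabilities, and the one-dimensional Gaussian conditional densities are hypothetical choices made only for this example; they do not come from the text.

```python
import math

def gaussian_pdf(z, mean, std):
    """1-D normal density, used here as an assumed form of p(z | w)."""
    return math.exp(-0.5 * ((z - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))

# Hypothetical two-class problem (assumption, not from the text).
PRIORS = {"w1": 0.7, "w2": 0.3}                 # prior probabilities P(w)
PARAMS = {"w1": (0.0, 1.0), "w2": (2.0, 1.0)}   # (mean, std) of p(z | w)

def map_classify(z):
    """Return the class that maximizes p(z | w) * P(w), as in (2.12)."""
    scores = {w: gaussian_pdf(z, *PARAMS[w]) * PRIORS[w] for w in PRIORS}
    return max(scores, key=scores.get)

if __name__ == "__main__":
    for z in (0.0, 1.0, 1.5, 3.0):
        print(z, map_classify(z))
```

Each dictionary entry in `scores` plays the role of one branch of the structure: a conditional density weighted by its prior, with `max` acting as the maximum selector.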

Suppose that a class $\hat{\omega}_i$ is assigned to an object with measurement vector $\mathbf{z}$. The probability of having a correct classification is $P(\hat{\omega}_i|\mathbf{z})$. Consequently, the probability of having a classification error is $1 - P(\hat{\omega}_i|\mathbf{z})$. For an arbitrary decision function $\hat{\omega}(\mathbf{z})$, the conditional error probability is:

$$e(\mathbf{z}) = 1 - P(\hat{\omega}(\mathbf{z})|\mathbf{z}) \qquad (2.13)$$
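The conditional error probability (2.13) can be evaluated for any decision function once the posterior probabilities are available. The sketch below assumes a hypothetical two-class problem with Gaussian conditional densities; the class names, priors, and density parameters are illustrative assumptions, and the posteriors are obtained from Bayes' theorem.

```python
import math

def gaussian_pdf(z, mean, std):
    """1-D normal density, used here as an assumed form of p(z | w)."""
    return math.exp(-0.5 * ((z - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))

# Hypothetical two-class problem (assumption, not from the text).
PRIORS = {"w1": 0.7, "w2": 0.3}
PARAMS = {"w1": (0.0, 1.0), "w2": (2.0, 1.0)}   # (mean, std) of p(z | w)

def posteriors(z):
    """P(w | z) = p(z | w) P(w) / p(z), with p(z) the sum over all classes."""
    joint = {w: gaussian_pdf(z, *PARAMS[w]) * PRIORS[w] for w in PRIORS}
    p_z = sum(joint.values())
    return {w: joint[w] / p_z for w in joint}

def conditional_error(z, decide):
    """e(z) = 1 - P(decide(z) | z) for an arbitrary decision function."""
    return 1.0 - posteriors(z)[decide(z)]

def map_decide(z):
    """Decision function that picks the class with the largest posterior."""
    post = posteriors(z)
    return max(post, key=post.get)

def always_w1(z):
    """A deliberately poor decision function that ignores the measurement."""
    return "w1"

if __name__ == "__main__":
    print(conditional_error(3.0, map_decide), conditional_error(3.0, always_w1))
```

Comparing `map_decide` with `always_w1` at the same measurement shows how the conditional error depends on the decision function, not only on the measurement.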

It is the probability of an erroneous classification of an object whose

measurement is z. The error probability averaged over all objects can be

found by averaging e(z) over all the possible measurement vectors:

$$E = \mathrm{E}[e(\mathbf{z})] = \int_{\mathbf{z}} e(\mathbf{z})\, p(\mathbf{z})\, d\mathbf{z} \qquad (2.14)$$

The integral extends over the entire measurement space. E is called the

error rate, and is often used as a performance measure of a classifier.
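For a scalar measurement, the integral in (2.14) can be approximated numerically. The following sketch uses a midpoint rule on a hypothetical two-class Gaussian problem; the class names, priors, density parameters, integration limits, and step count are all assumptions chosen for illustration. It relies on the identity e(z)p(z) = p(z) - p(z|w)P(w), where w is the class chosen at z.

```python
import math

def gaussian_pdf(z, mean, std):
    """1-D normal density, used here as an assumed form of p(z | w)."""
    return math.exp(-0.5 * ((z - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))

# Hypothetical two-class problem (assumption, not from the text).
PRIORS = {"w1": 0.7, "w2": 0.3}
PARAMS = {"w1": (0.0, 1.0), "w2": (2.0, 1.0)}   # (mean, std) of p(z | w)

def joint(z):
    """Return p(z | w) P(w) for every class w."""
    return {w: gaussian_pdf(z, *PARAMS[w]) * PRIORS[w] for w in PRIORS}

def map_decide(z):
    """Pick the class with the largest p(z | w) P(w)."""
    j = joint(z)
    return max(j, key=j.get)

def error_rate(decide, lo=-10.0, hi=12.0, steps=20000):
    """Midpoint-rule approximation of E, the integral of e(z) p(z) over [lo, hi]."""
    dz = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        z = lo + (i + 0.5) * dz
        j = joint(z)
        p_z = sum(j.values())
        # e(z) p(z) = (1 - P(w | z)) p(z) = p(z) - p(z | w) P(w) for the chosen w
        total += (p_z - j[decide(z)]) * dz
    return total

if __name__ == "__main__":
    print("MAP decision:", error_rate(map_decide))
    print("always w1   :", error_rate(lambda z: "w1"))
```

The interval [lo, hi] must cover essentially all of the probability mass of p(z); here it spans at least ten standard deviations on either side of both class means.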

The classifier that yields the minimum error rate among all

classifiers is called the minimum error rate classifier. With a uniform

cost function, the risk and the error rate are equal. Therefore, the

minimum error rate classifier is a Bayes classifier with uniform cost

[Figure: block diagram with input z, blocks p(z | ω_1), p(z | ω_2), ..., p(z | ω_k) with priors P(ω_1), P(ω_2), ..., P(ω_k), a maximum selector, and output ω̂(z)]

Figure 2.6 Bayes decision function with uniform cost function (MAP classification)