Page 140 - Dynamic Vision for Perception and Control of Motion


124 5 Extraction of Visual Features

terpretation process. So, the amount of image data to be touched is reduced drastically, while background knowledge on the object/subject class allows asking specific questions in image interpretation with correspondingly tuned feature extractors. Continuity conditions over time play an important role in state estimation from image data. In a complex scene, many of these questions have to be answered in parallel during each video cycle. This can be achieved by time slicing attention of a single processor/software combination or by operating with several or many processors (maybe with special software packages) in parallel. Increasing computing power available per processor will shift the solution to the former layout. Over the last decade, the number of processors in the vision systems of UniBwM has been reduced by almost an order of magnitude (from 46 to 6) while at the same time the performance level increased considerably. The knowledge bases for recognizing single objects/subjects and their motion over time will be treated in Chapters 6 and 12.
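The trade-off between time slicing on one processor and spreading the questions over several processors can be illustrated with a small scheduling sketch. It is not the UniBwM implementation: the `Query` type, the `assign_queries` function, the greedy least-loaded assignment rule, the per-query cost figures, and the 40 ms video cycle are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Query:
    """One image-interpretation question (hypothetical type)."""
    name: str
    cost_ms: float  # assumed processing-time estimate per video cycle


def assign_queries(
    queries: List[Query], n_processors: int, cycle_ms: float = 40.0
) -> Tuple[List[List[str]], List[str]]:
    """Assign queries to processors within one video cycle.

    With n_processors == 1 this is plain time slicing; with more
    processors the same rule spreads the load. Queries that do not
    fit into the cycle budget are returned as deferred.
    """
    loads = [0.0] * n_processors
    plan: List[List[str]] = [[] for _ in range(n_processors)]
    deferred: List[str] = []
    # Place the most expensive queries first (stable for equal costs).
    for q in sorted(queries, key=lambda q: q.cost_ms, reverse=True):
        p = min(range(n_processors), key=lambda i: loads[i])  # least loaded
        if loads[p] + q.cost_ms <= cycle_ms:
            loads[p] += q.cost_ms
            plan[p].append(q.name)
        else:
            deferred.append(q.name)
    return plan, deferred


queries = [
    Query("lane", 15.0),
    Query("vehicle_1", 12.0),
    Query("vehicle_2", 12.0),
    Query("sign", 8.0),
]
single_plan, single_deferred = assign_queries(queries, n_processors=1)
dual_plan, dual_deferred = assign_queries(queries, n_processors=2)
```

With these made-up costs, a single processor cannot answer all four questions within one cycle and has to defer one, while two processors fit everything. A faster processor (lower `cost_ms` values) removes the deferral without adding hardware, which is the shift toward the single-processor layout described above.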

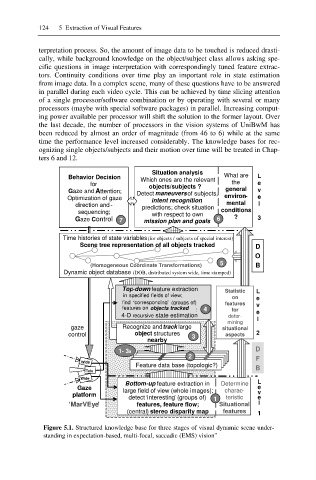

[Diagram for Figure 5.1; block labels recovered from the image, listed bottom to top]

Level 1: Bottom-up feature extraction in large field of view (whole images); detect 'interesting' (groups of) features, feature flow; (central) stereo disparity map. Determine characteristic situational features. Input: 'MarVEye' gaze platform (wide, tele, wide). Output: feature data base (topologic?).

Level 2: Top-down feature extraction in specified fields of view; find 'corresponding' (groups of) features on objects tracked; 4-D recursive state estimation. Recognize and track large object structures nearby. Statistics on features for determining situational aspects. Output: dynamic object database (DOB, distributed system-wide, time-stamped); scene tree representation of all objects tracked (homogeneous coordinate transformations); time histories of state variables (for objects/subjects of special interest).

Level 3: Situation analysis: Which ones are the relevant objects/subjects? Detect maneuvers of subjects, intent recognition, predictions; check situation with respect to own mission plan and goals. What are the general environmental conditions? Behavior decision for gaze and attention: optimization of gaze direction and sequencing; gaze control.

Figure 5.1. Structured knowledge base for three stages of visual dynamic scene understanding in expectation-based, multi-focal, saccadic (EMS) vision
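The figure names a scene tree built from homogeneous coordinate transformations and a time-stamped dynamic object database. A minimal sketch of how such a tree can chain transformations is given below. The `SceneNode` class, its field names, the helper functions, and the example geometry are hypothetical and are not taken from the system described in the book.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Matrix = List[List[float]]  # 4x4 homogeneous transformation


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(x: float, y: float, z: float) -> Matrix:
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def rotation_z(angle_rad: float) -> Matrix:
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0, 0.0],
            [s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


@dataclass
class SceneNode:
    """One tracked object in the scene tree (hypothetical layout)."""
    name: str
    to_parent: Matrix        # maps this node's coordinates into the parent's
    timestamp_s: float       # time stamp of the latest state estimate
    parent: Optional["SceneNode"] = None

    def to_root(self) -> Matrix:
        """Chain transformations along the path up to the root."""
        m = self.to_parent
        node = self.parent
        while node is not None:
            m = matmul(node.to_parent, m)
            node = node.parent
        return m


def transform_point(m: Matrix, p: Tuple[float, float, float]) -> Tuple[float, ...]:
    v = [p[0], p[1], p[2], 1.0]
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(3))


# Assumed example: world -> vehicle -> gaze platform panned by 90 degrees.
world = SceneNode("world", translation(0.0, 0.0, 0.0), timestamp_s=0.0)
vehicle = SceneNode("vehicle", translation(10.0, 0.0, 0.0), 0.04, parent=world)
platform = SceneNode(
    "platform",
    matmul(translation(2.0, 0.0, 1.0), rotation_z(math.pi / 2)),
    0.04,
    parent=vehicle,
)
point_in_world = transform_point(platform.to_root(), (1.0, 0.0, 0.0))
```

In this example a point one unit ahead of the panned platform ends up at approximately (12, 1, 1) in world coordinates. Storing only the transformation to the parent keeps each node's update local: when the vehicle moves, only its own `to_parent` entry changes, and all attached nodes follow through the chain.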