Page 144 - Introduction to Autonomous Mobile Robots


Perception

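[Figure labels: object contour; scene point (x, y, z); left lens (lens l), origin, and right lens (lens r), each lens offset b/2 from the origin; axes x, y, z; focal length f; focal plane with image points (x_l, y_l) and (x_r, y_r).]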

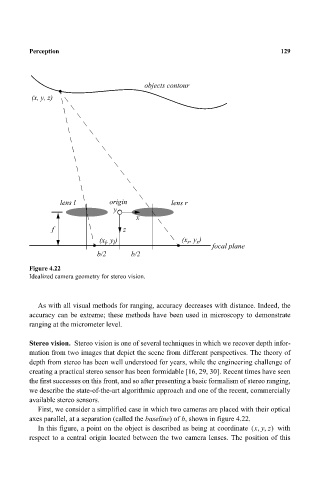

Figure 4.22. Idealized camera geometry for stereo vision.

As with all visual methods for ranging, accuracy decreases with distance. At short range, however, the accuracy can be extreme: these methods have been used in microscopy to demonstrate ranging at the micrometer level.

Stereo vision. Stereo vision is one of several techniques in which we recover depth information from two images that depict the scene from different perspectives. The theory of depth from stereo has been well understood for years, while the engineering challenge of creating a practical stereo sensor has been formidable [16, 29, 30]. Recent times have seen the first successes on this front, and so after presenting a basic formalism of stereo ranging, we describe the state-of-the-art algorithmic approach and one of the recent, commercially available stereo sensors.

First, we consider a simplified case in which two cameras are placed with their optical axes parallel, at a separation (called the baseline) of b, as shown in figure 4.22.
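As a rough illustration of this parallel-axis setup, the sketch below projects a scene point into both cameras and recovers its depth from the difference between the two image x-coordinates. It assumes ideal pinhole cameras, a scene point (x, y, z) measured from an origin midway between the lenses, lenses at x = -b/2 and x = +b/2, and a common focal length f, following the labels in figure 4.22. The function names `project_stereo` and `depth_from_disparity` are hypothetical, and the similar-triangle relations are the standard pinhole model, not necessarily the exact formalism developed in the text.

```python
def project_stereo(point, f, b):
    """Project a scene point (x, y, z) into the left and right images.

    Assumptions (idealized, for illustration only):
      - pinhole cameras with parallel optical axes along z,
      - left lens at x = -b/2, right lens at x = +b/2,
      - both cameras share the focal length f,
      - image coordinates are measured from each camera's optical axis.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the cameras (z > 0)")
    # Similar triangles: image coordinate = f * (lateral offset from lens) / depth
    left = (f * (x + b / 2) / z, f * y / z)
    right = (f * (x - b / 2) / z, f * y / z)
    return left, right


def depth_from_disparity(x_l, x_r, f, b):
    """Recover depth z from the horizontal image coordinates of one point."""
    disparity = x_l - x_r  # equals f * b / z under the assumptions above
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    return f * b / disparity


if __name__ == "__main__":
    f, b = 1.0, 0.5                # focal length and baseline, same length unit
    point = (0.5, 0.25, 2.0)       # scene point relative to the central origin
    (x_l, y_l), (x_r, y_r) = project_stereo(point, f, b)
    print("left image point: ", (x_l, y_l))
    print("right image point:", (x_r, y_r))
    print("recovered depth:  ", depth_from_disparity(x_l, x_r, f, b))
```

In this idealized model the y-coordinates in the two images are identical, so only the horizontal offset between the image points carries depth information.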

In this figure, a point on the object is described as being at coordinate (x, y, z) with respect to a central origin located between the two camera lenses. The position of this