Page 21 - Probability and Statistical Inference

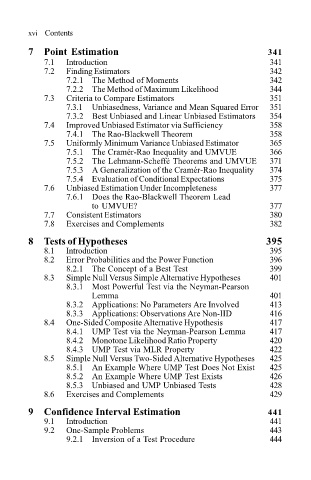

xvi Contents

7 Point Estimation 341

7.1 Introduction 341

7.2 Finding Estimators 342

7.2.1 The Method of Moments 342

7.2.2 The Method of Maximum Likelihood 344

7.3 Criteria to Compare Estimators 351

7.3.1 Unbiasedness, Variance and Mean Squared Error 351

7.3.2 Best Unbiased and Linear Unbiased Estimators 354

7.4 Improved Unbiased Estimator via Sufficiency 358

7.4.1 The Rao-Blackwell Theorem 358

7.5 Uniformly Minimum Variance Unbiased Estimator 365

7.5.1 The Cramér-Rao Inequality and UMVUE 366

7.5.2 The Lehmann-Scheffé Theorems and UMVUE 371

7.5.3 A Generalization of the Cramér-Rao Inequality 374

7.5.4 Evaluation of Conditional Expectations 375

7.6 Unbiased Estimation Under Incompleteness 377

7.6.1 Does the Rao-Blackwell Theorem Lead to UMVUE? 377

7.7 Consistent Estimators 380

7.8 Exercises and Complements 382

8 Tests of Hypotheses 395

8.1 Introduction 395

8.2 Error Probabilities and the Power Function 396

8.2.1 The Concept of a Best Test 399

8.3 Simple Null Versus Simple Alternative Hypotheses 401

8.3.1 Most Powerful Test via the Neyman-Pearson Lemma 401

8.3.2 Applications: No Parameters Are Involved 413

8.3.3 Applications: Observations Are Non-IID 416

8.4 One-Sided Composite Alternative Hypothesis 417

8.4.1 UMP Test via the Neyman-Pearson Lemma 417

8.4.2 Monotone Likelihood Ratio Property 420

8.4.3 UMP Test via MLR Property 422

8.5 Simple Null Versus Two-Sided Alternative Hypotheses 425

8.5.1 An Example Where UMP Test Does Not Exist 425

8.5.2 An Example Where UMP Test Exists 426

8.5.3 Unbiased and UMP Unbiased Tests 428

8.6 Exercises and Complements 429

9 Confidence Interval Estimation 441

9.1 Introduction 441

9.2 One-Sample Problems 443

9.2.1 Inversion of a Test Procedure 444