Page 81 - Socially Intelligent Agents Creating Relationships with Computers and Robots


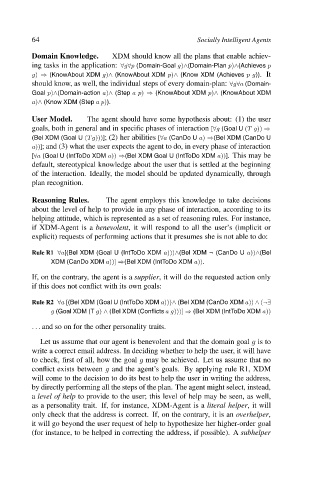

Domain Knowledge. XDM should know all the plans that achieve tasks in the application: ∀g∀p [(Domain-Goal g) ∧ (Domain-Plan p) ∧ (Achieves p g)] ⇒ (KnowAbout XDM g) ∧ (KnowAbout XDM p) ∧ (Know XDM (Achieves p g)). It should also know the individual steps of every domain plan: ∀p∀a [(Domain-Plan p) ∧ (Domain-Action a) ∧ (Step a p)] ⇒ (KnowAbout XDM p) ∧ (KnowAbout XDM a) ∧ (Know XDM (Step a p)).
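The two formulas above amount to a lookup structure over goals, plans, and steps. A minimal Python sketch of one possible encoding follows; the class names, the plan name `compose_address`, and the step names are hypothetical illustrations, not part of XDM-Agent itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DomainPlan:
    name: str
    goal: str      # the domain goal g such that (Achieves p g)
    steps: tuple   # ordered domain actions a such that (Step a p)


class DomainKnowledge:
    """Assumed container for what XDM knows about goals, plans and steps."""

    def __init__(self, plans):
        self._plans = {p.name: p for p in plans}

    def achieves(self, plan_name, goal):
        # Know XDM (Achieves p g)
        plan = self._plans.get(plan_name)
        return plan is not None and plan.goal == goal

    def is_step(self, action, plan_name):
        # Know XDM (Step a p)
        plan = self._plans.get(plan_name)
        return plan is not None and action in plan.steps

    def plans_for(self, goal):
        # All known plans that achieve the given goal.
        return [p for p in self._plans.values() if p.goal == goal]


# Hypothetical plan for the goal of writing a correct email address.
email_plan = DomainPlan(
    name="compose_address",
    goal="write_correct_email_address",
    steps=("type_user_name", "type_at_sign", "type_domain"),
)
xdm_knowledge = DomainKnowledge([email_plan])
```

Keeping the goal and the steps on the plan object means that both `Achieves` and `Step` facts come from a single record, so they cannot drift apart.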

User Model. The agent should have some hypothesis about: (1) the user’s goals, both in general and in specific phases of interaction [∀g (Goal U (T g)) ⇒ (Bel XDM (Goal U (T g)))]; (2) her abilities [∀a (CanDo U a) ⇒ (Bel XDM (CanDo U a))]; and (3) what the user expects the agent to do in every phase of interaction [∀a (Goal U (IntToDo XDM a)) ⇒ (Bel XDM (Goal U (IntToDo XDM a)))]. This may be default, stereotypical knowledge about the user that is set at the beginning of the interaction. Ideally, the model should be updated dynamically through plan recognition.
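As a rough illustration, the three kinds of belief can be held in one record that starts from a stereotype and is revised as the interaction proceeds. The sketch below is an assumption-laden simplification: the field names, the default values, and the `observe_user_action` update are hypothetical, and the update is a naive stand-in for plan recognition, not an implementation of it.

```python
from dataclasses import dataclass, field


@dataclass
class UserModel:
    goals: set = field(default_factory=set)               # Bel XDM (Goal U (T g))
    abilities: set = field(default_factory=set)           # Bel XDM (CanDo U a)
    expects_from_agent: set = field(default_factory=set)  # Bel XDM (Goal U (IntToDo XDM a))

    def observe_user_action(self, action):
        # Naive update (assumption): once the user is seen performing an
        # action, believe she can do it and stop expecting to be asked for it.
        self.abilities.add(action)
        self.expects_from_agent.discard(action)


def default_stereotype():
    # Hypothetical default beliefs fixed at the start of the interaction.
    return UserModel(
        goals={"write_correct_email_address"},
        abilities={"type_user_name"},
        expects_from_agent={"type_at_sign", "type_domain"},
    )


model = default_stereotype()
model.observe_user_action("type_at_sign")
```

After the observed action, the model credits the user with `type_at_sign` and leaves only `type_domain` as something she expects the agent to do.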

Reasoning Rules. The agent employs this knowledge to decide what level of help to provide in each phase of interaction, according to its helping attitude, which is represented as a set of reasoning rules. For instance, if XDM-Agent is benevolent, it will respond to all the user’s (implicit or explicit) requests to perform actions that it presumes she is not able to do:

Rule R1 ∀a [(Bel XDM (Goal U (IntToDo XDM a))) ∧ (Bel XDM ¬(CanDo U a)) ∧ (Bel XDM (CanDo XDM a))] ⇒ (Bel XDM (IntToDo XDM a)).

If, on the contrary, the agent is a supplier, it will do the requested action only if this does not conflict with its own goals:

Rule R2 ∀a [(Bel XDM (Goal U (IntToDo XDM a))) ∧ (Bel XDM (CanDo XDM a)) ∧ (¬∃g ((Goal XDM (T g)) ∧ (Bel XDM (Conflicts a g))))] ⇒ (Bel XDM (IntToDo XDM a))

... and so on for the other personality traits.
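Rules R1 and R2 can be read as predicates over the agent's beliefs. The following Python sketch shows one way to evaluate them; the `Beliefs` record, the action names, and the agent goal `save_time` are hypothetical. It also assumes a closed world, so an action missing from `user_can_do` is treated as (Bel XDM ¬(CanDo U a)), which is stronger than mere absence of belief.

```python
from dataclasses import dataclass, field


@dataclass
class Beliefs:
    user_wants_agent_to_do: set = field(default_factory=set)  # Bel XDM (Goal U (IntToDo XDM a))
    user_can_do: set = field(default_factory=set)             # Bel XDM (CanDo U a)
    agent_can_do: set = field(default_factory=set)            # Bel XDM (CanDo XDM a)
    agent_goals: set = field(default_factory=set)             # Goal XDM (T g)
    conflicts: set = field(default_factory=set)               # pairs (a, g): Bel XDM (Conflicts a g)


def rule_r1(b, action):
    """Benevolent: do what the user wants, cannot do herself, and XDM can do."""
    return (
        action in b.user_wants_agent_to_do
        and action not in b.user_can_do  # closed-world reading of Bel XDM ¬(CanDo U a)
        and action in b.agent_can_do
    )


def rule_r2(b, action):
    """Supplier: do what the user wants if XDM can and no own goal conflicts."""
    return (
        action in b.user_wants_agent_to_do
        and action in b.agent_can_do
        and not any((action, g) in b.conflicts for g in b.agent_goals)
    )


beliefs = Beliefs(
    user_wants_agent_to_do={"type_domain", "type_user_name"},
    user_can_do={"type_user_name"},
    agent_can_do={"type_domain", "type_user_name"},
    agent_goals={"save_time"},
    conflicts={("type_user_name", "save_time")},
)
```

With these beliefs, `rule_r1` holds for `type_domain` but not for `type_user_name` (the user can do it herself), and `rule_r2` holds for `type_domain` but not for `type_user_name` (it conflicts with the agent's own goal).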

Let us assume that our agent is benevolent and that the domain goal g is to write a correct email address. In deciding whether to help the user, it will first have to check how the goal g may be achieved. Let us assume that no conflict exists between g and the agent’s goals. By applying rule R1, XDM will decide to do its best to help the user write the address, by directly performing all the steps of the plan. The agent might instead select a level of help to provide to the user; this level of help may also be seen as a personality trait. If, for instance, XDM-Agent is a literal helper, it will only check that the address is correct. If, on the contrary, it is an overhelper, it will go beyond the user’s request for help to hypothesize her higher-order goal (for instance, to be helped in correcting the address, if possible). A subhelper