Page 276 - Applied statistics and probability for engineers


254 Chapter 7/Point Estimation of Parameters and Sampling Distributions

7-3.5 Mean Squared Error of an Estimator

Sometimes it is necessary to use a biased estimator. In such cases, the mean squared error of the estimator can be important. The mean squared error of an estimator $\hat{\Theta}$ is the expected squared difference between $\hat{\Theta}$ and $\theta$.

Mean Squared Error of an Estimator

The mean squared error of an estimator $\hat{\Theta}$ of the parameter $\theta$ is defined as

$$\mathrm{MSE}(\hat{\Theta}) = E(\hat{\Theta} - \theta)^2 \qquad (7\text{-}8)$$

The mean squared error can be rewritten as follows:

$$\begin{aligned}
\mathrm{MSE}(\hat{\Theta}) &= E[\hat{\Theta} - E(\hat{\Theta})]^2 + [\theta - E(\hat{\Theta})]^2 \\
&= V(\hat{\Theta}) + (\text{bias})^2
\end{aligned}$$
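The rewrite of the mean squared error follows by adding and subtracting $E(\hat{\Theta})$ inside the square. The cross term drops out because $E(\hat{\Theta}) - \theta$ is a constant and $E[\hat{\Theta} - E(\hat{\Theta})] = 0$. Written out, with $\text{bias} = E(\hat{\Theta}) - \theta$:

$$\begin{aligned}
\mathrm{MSE}(\hat{\Theta})
  &= E\big[(\hat{\Theta} - E(\hat{\Theta})) + (E(\hat{\Theta}) - \theta)\big]^2 \\
  &= E[\hat{\Theta} - E(\hat{\Theta})]^2
     + 2\,[E(\hat{\Theta}) - \theta]\,E[\hat{\Theta} - E(\hat{\Theta})]
     + [\theta - E(\hat{\Theta})]^2 \\
  &= V(\hat{\Theta}) + 0 + (\text{bias})^2
\end{aligned}$$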

That is, the mean squared error of $\hat{\Theta}$ is equal to the variance of the estimator plus the squared bias. If $\hat{\Theta}$ is an unbiased estimator of $\theta$, the mean squared error of $\hat{\Theta}$ is equal to the variance of $\hat{\Theta}$.
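As a rough numerical check of $\mathrm{MSE} = V + (\text{bias})^2$, the sketch below simulates a deliberately biased estimator. The estimator $c\bar{X}$ with $c = 0.8$, the normal samples of size $n = 5$ with $\mu = 2$ and $\sigma = 1$, and the function names are all assumptions chosen for illustration. Computed from the same draws, the empirical MSE equals the empirical variance plus the squared empirical bias up to rounding, and both should land near the theoretical value $c^2\sigma^2/n + (c-1)^2\mu^2 = 0.128 + 0.16 = 0.288$.

```python
import random


def simulate_shrunk_mean(mu, sigma, n, c, reps, seed=1):
    """Draw `reps` values of the hypothetical estimator c * Xbar from N(mu, sigma^2) samples."""
    rng = random.Random(seed)
    draws = []
    for _ in range(reps):
        sample = [rng.gauss(mu, sigma) for _ in range(n)]
        draws.append(c * sum(sample) / n)
    return draws


def mse_decomposition(draws, theta):
    """Empirical MSE, variance (divisor len(draws)), and bias of the draws about theta."""
    m = len(draws)
    mean_est = sum(draws) / m
    mse = sum((d - theta) ** 2 for d in draws) / m
    var = sum((d - mean_est) ** 2 for d in draws) / m
    bias = mean_est - theta
    return mse, var, bias


mu, sigma, n, c = 2.0, 1.0, 5, 0.8
draws = simulate_shrunk_mean(mu, sigma, n, c, reps=40_000)
mse, var, bias = mse_decomposition(draws, theta=mu)
print(f"MSE = {mse:.4f}   V + bias^2 = {var + bias ** 2:.4f}")
# Theory for this setup: V = c^2 sigma^2 / n = 0.128, bias = (c - 1) mu = -0.4,
# so MSE = 0.128 + 0.16 = 0.288.
```

Using a variance with divisor equal to the number of draws makes the empirical identity exact, which mirrors the algebra of the population identity.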

The mean squared error is an important criterion for comparing two estimators. Let $\hat{\Theta}_1$ and $\hat{\Theta}_2$ be two estimators of the parameter $\theta$, and let $\mathrm{MSE}(\hat{\Theta}_1)$ and $\mathrm{MSE}(\hat{\Theta}_2)$ be the mean squared errors of $\hat{\Theta}_1$ and $\hat{\Theta}_2$. Then the relative efficiency of $\hat{\Theta}_2$ to $\hat{\Theta}_1$ is defined as

$$\frac{\mathrm{MSE}(\hat{\Theta}_1)}{\mathrm{MSE}(\hat{\Theta}_2)} \qquad (7\text{-}9)$$

If this relative efficiency is less than 1, we would conclude that $\hat{\Theta}_1$ is a more efficient estimator of $\theta$ than $\hat{\Theta}_2$ in the sense that it has a smaller mean squared error.
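A small simulation can make Equation 7-9 concrete. In this sketch, assumed purely for illustration, $\hat{\Theta}_1$ is the sample mean and $\hat{\Theta}_2$ is the sample median of $n = 25$ normal observations with $\theta = 0$ and $\sigma = 1$; the function names are hypothetical. A ratio below 1 says the sample mean is the more efficient of the two for normal data. For large $n$ this ratio is known to approach $2/\pi \approx 0.64$, so the simulated value should fall somewhere near there.

```python
import random
import statistics


def empirical_mse(draws, theta):
    """Average squared distance of the draws from the true parameter theta."""
    return sum((d - theta) ** 2 for d in draws) / len(draws)


def relative_efficiency_mean_vs_median(theta=0.0, sigma=1.0, n=25, reps=20_000, seed=7):
    """Estimate MSE(mean) / MSE(median): Equation 7-9 with Theta1 = mean, Theta2 = median."""
    rng = random.Random(seed)
    means, medians = [], []
    for _ in range(reps):
        sample = [rng.gauss(theta, sigma) for _ in range(n)]
        means.append(sum(sample) / n)
        medians.append(statistics.median(sample))
    return empirical_mse(means, theta) / empirical_mse(medians, theta)


ratio = relative_efficiency_mean_vs_median()
print(f"estimated relative efficiency = {ratio:.3f}")
```

Both estimators are computed from the same simulated samples, which keeps the comparison fair and reduces the noise in the ratio.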

Sometimes we ind that biased estimators are preferable to unbiased estimators because they

have smaller mean squared error. That is, we may be able to reduce the variance of the estimator

considerably by introducing a relatively small amount of bias. As long as the reduction in vari-

ance is larger than the squared bias, an improved estimator from a mean squared error viewpoint

∧

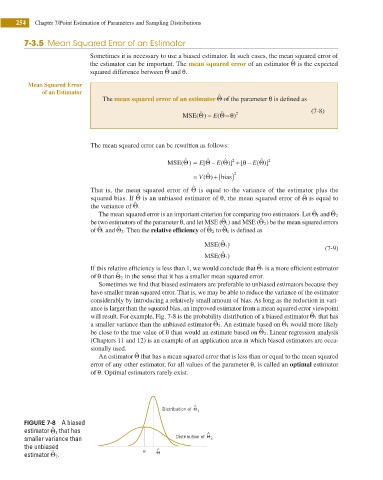

will result. For example, Fig. 7-8 is the probability distribution of a biased estimator Q1 that has

∧

∧

a smaller variance than the unbiased estimator Q2. An estimate based on Q1 would more likely

∧

be close to the true value of θ than would an estimate based on Q2. Linear regression analysis

(Chapters 11 and 12) is an example of an application area in which biased estimators are occa-

sionally used. ∧
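A familiar case of trading a little bias for lower variance is estimating $\sigma^2$ from a normal sample. The sketch below, an illustration under assumed values ($n = 10$, $\sigma^2 = 1$, normal data; hypothetical function names), compares the unbiased $S^2$ with divisor $n - 1$ against the biased version with divisor $n$. Under normality the exact values are $\mathrm{MSE}(S^2) = 2\sigma^4/(n-1) \approx 0.222$ and, for divisor $n$, $(2n-1)\sigma^4/n^2 = 0.19$, so the biased estimator should come out ahead.

```python
import random


def sum_sq_dev(sample):
    """Sum of squared deviations of the sample from its own mean."""
    xbar = sum(sample) / len(sample)
    return sum((x - xbar) ** 2 for x in sample)


def compare_variance_estimators(sigma2=1.0, n=10, reps=50_000, seed=3):
    """Return empirical MSEs of the divisor-(n-1) and divisor-n variance estimators."""
    rng = random.Random(seed)
    sigma = sigma2 ** 0.5
    se_unbiased = 0.0  # running sum of squared errors, divisor n - 1
    se_biased = 0.0    # running sum of squared errors, divisor n
    for _ in range(reps):
        ss = sum_sq_dev([rng.gauss(0.0, sigma) for _ in range(n)])
        se_unbiased += (ss / (n - 1) - sigma2) ** 2
        se_biased += (ss / n - sigma2) ** 2
    return se_unbiased / reps, se_biased / reps


mse_unbiased, mse_biased = compare_variance_estimators()
print(f"MSE, divisor n-1: {mse_unbiased:.4f}   MSE, divisor n: {mse_biased:.4f}")
# Normal-theory values for n = 10, sigma^2 = 1:
#   divisor n - 1: 2 / (n - 1)    = 0.2222
#   divisor n:     (2n - 1) / n^2 = 0.1900
```

The divisor-$n$ estimator has bias $-\sigma^2/n$, but its variance is smaller by enough that the squared bias is more than paid for.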

An estimator $\hat{\Theta}$ whose mean squared error is less than or equal to the mean squared error of any other estimator, for all values of the parameter $\theta$, is called an optimal estimator of $\theta$. Optimal estimators rarely exist.
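One way to see why: for any fixed number $\theta_0$, the estimator that ignores the data and always reports $\theta_0$ has variance 0 and bias $\theta_0 - \theta$, so its MSE is $(\theta_0 - \theta)^2$, which is 0 when $\theta = \theta_0$. An optimal estimator would therefore need zero MSE at every $\theta$, which is generally impossible. The sketch compares that constant estimator (hypothetical guess $\theta_0 = 0$) with $\bar{X}$, assumed here to be the mean of $n = 10$ normal observations with $\sigma = 1$, so that $\mathrm{MSE}(\bar{X}) = \sigma^2/n = 0.1$ for every $\theta$.

```python
def mse_sample_mean(theta, sigma=1.0, n=10):
    """Xbar is unbiased, so its MSE is its variance sigma^2 / n whatever theta is."""
    return sigma ** 2 / n


def mse_constant(theta, guess=0.0):
    """Hypothetical estimator that always reports `guess`: variance 0, bias guess - theta."""
    return (guess - theta) ** 2


for theta in [-1.0, -0.5, -0.1, 0.0, 0.1, 0.5, 1.0]:
    a, b = mse_sample_mean(theta), mse_constant(theta)
    smaller = "constant" if b < a else "Xbar"
    print(f"theta={theta:+.1f}  MSE(Xbar)={a:.3f}  MSE(const)={b:.3f}  smaller: {smaller}")
```

The constant estimator wins near $\theta = 0$ and loses badly elsewhere, so neither MSE curve lies below the other for all $\theta$.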

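FIGURE 7-8 A biased estimator $\hat{\Theta}_1$ that has smaller variance than the unbiased estimator $\hat{\Theta}_2$. [Figure: distributions of $\hat{\Theta}_1$ and $\hat{\Theta}_2$, with the true value $\theta$ marked on the horizontal axis.]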