Page 171 - Classification Parameter Estimation & State Estimation An Engg Approach Using MATLAB

P. 171

160 SUPERVISED LEARNING

edit the training set such that the (expensive) -NNR can be replaced

with the 1-NNR. The strategy is to remove those samples from the

training set that when used in the 1-NNR would cause erroneous results.

An algorithm that accomplishes this is the so-called multi-edit algorithm.

The following algorithm is from Devijver and Kittler.

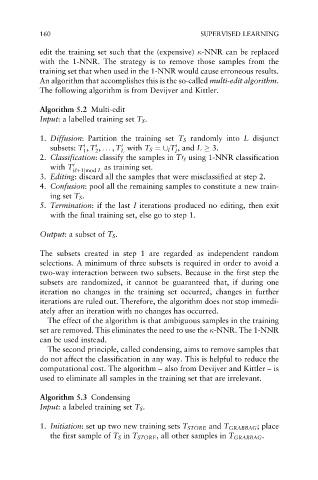

Algorithm 5.2 Multi-edit

Input: a labelled training set T S .

1. Diffusion: Partition the training set T S randomly into L disjunct

subsets: T , T , .. . , T with T S ¼[ l T , and L 3.

0

0

0

0

1

L

2

l

2. Classification: classify the samples in T0 ‘ using 1-NNR classification

with T 0 as training set.

(‘þ1)mod L

3. Editing: discard all the samples that were misclassified at step 2.

4. Confusion: pool all the remaining samples to constitute a new train-

ing set T S .

5. Termination: if the last I iterations produced no editing, then exit

with the final training set, else go to step 1.

Output: a subset of T S .

The subsets created in step 1 are regarded as independent random

selections. A minimum of three subsets is required in order to avoid a

two-way interaction between two subsets. Because in the first step the

subsets are randomized, it cannot be guaranteed that, if during one

iteration no changes in the training set occurred, changes in further

iterations are ruled out. Therefore, the algorithm does not stop immedi-

ately after an iteration with no changes has occurred.

The effect of the algorithm is that ambiguous samples in the training

set are removed. This eliminates the need to use the -NNR. The 1-NNR

can be used instead.

The second principle, called condensing, aims to remove samples that

do not affect the classification in any way. This is helpful to reduce the

computational cost. The algorithm – also from Devijver and Kittler – is

used to eliminate all samples in the training set that are irrelevant.

Algorithm 5.3 Condensing

Input: a labeled training set T S .

1. Initiation: set up two new training sets T STORE and T GRABBAG ; place

the first sample of T S in T STORE , all other samples in T GRABBAG .