Page 213 - Designing Sociable Robots

11.3 Implementation Overview

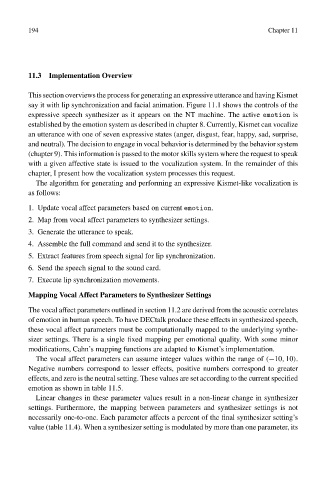

This section gives an overview of the process for generating an expressive utterance and having Kismet say it with lip synchronization and facial animation. Figure 11.1 shows the controls of the expressive speech synthesizer as it appears on the NT machine. The active emotion is established by the emotion system, as described in chapter 8. Currently, Kismet can vocalize an utterance in one of seven expressive states (anger, disgust, fear, happy, sad, surprise, and neutral). The decision to engage in vocal behavior is made by the behavior system (chapter 9). This information is passed to the motor skills system, where the request to speak with a given affective state is issued to the vocalization system. In the remainder of this chapter, I present how the vocalization system processes this request.

The algorithm for generating and performing an expressive Kismet-like vocalization is as follows:

1. Update vocal affect parameters based on current emotion.

2. Map from vocal affect parameters to synthesizer settings.

3. Generate the utterance to speak.

4. Assemble the full command and send it to the synthesizer.

5. Extract features from speech signal for lip synchronization.

6. Send the speech signal to the sound card.

7. Execute lip synchronization movements.
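The seven steps above can be read as a simple pipeline. The following is a minimal sketch of that ordering only, not Kismet's actual software: every identifier (`vocalize`, `STEP_ORDER`, the stage names, and the stub stages) is hypothetical, the stubs return dummy data, and the steps run strictly in sequence, which is an assumption made for simplicity.

```python
from typing import Any, Callable, Dict, List

# Step names in the order given by the list above. These identifiers are
# hypothetical labels, not names taken from Kismet's software.
STEP_ORDER = [
    "update_affect",       # 1. current emotion -> vocal affect parameters
    "map_settings",        # 2. vocal affect parameters -> synthesizer settings
    "generate_utterance",  # 3. produce the utterance to speak
    "synthesize",          # 4. assemble the command and send it to the synthesizer
    "extract_features",    # 5. speech signal -> lip synchronization features
    "play_audio",          # 6. send the speech signal to the sound card
    "lip_sync",            # 7. execute the lip synchronization movements
]


def vocalize(emotion: str, stages: Dict[str, Callable[..., Any]]) -> List[str]:
    """Run the seven steps once and return the names of the steps executed."""
    trace: List[str] = []

    def run(name: str, *args: Any) -> Any:
        # Record the step, then call the stage supplied by the caller.
        trace.append(name)
        return stages[name](*args)

    affect = run("update_affect", emotion)
    settings = run("map_settings", affect)
    text = run("generate_utterance")
    signal = run("synthesize", settings, text)
    features = run("extract_features", signal)
    run("play_audio", signal)
    run("lip_sync", features)
    return trace


def make_stub_stages() -> Dict[str, Callable[..., Any]]:
    """Placeholder stages returning dummy data so the sketch can run."""
    return {
        "update_affect": lambda emotion: {"emotion": emotion},
        "map_settings": lambda affect: {"settings_for": affect["emotion"]},
        "generate_utterance": lambda: "placeholder utterance",
        "synthesize": lambda settings, text: [0.0, 0.5, -0.5],
        "extract_features": lambda signal: [abs(s) for s in signal],
        "play_audio": lambda signal: None,
        "lip_sync": lambda features: None,
    }


if __name__ == "__main__":
    print(vocalize("happy", make_stub_stages()))
```

Passing the stages in as callables keeps the ordering separate from any particular synthesizer or motor interface, so each stub could be swapped for a real component without changing `vocalize`.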

Mapping Vocal Affect Parameters to Synthesizer Settings

The vocal affect parameters outlined in section 11.2 are derived from the acoustic correlates of emotion in human speech. To have DECtalk produce these effects in synthesized speech, these vocal affect parameters must be computationally mapped to the underlying synthesizer settings. There is a single fixed mapping per emotional quality. With some minor modifications, Cahn’s mapping functions are adapted to Kismet’s implementation.

The vocal affect parameters can assume integer values from −10 to 10. Negative numbers correspond to lesser effects, positive numbers correspond to greater effects, and zero is the neutral setting. These values are set according to the currently specified emotion, as shown in table 11.5.
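As an illustration of the value convention just described, here is a small sketch that checks a set of vocal affect parameters and classifies each value. It is not taken from Kismet's code: the function names and the parameter names passed to them are hypothetical, the actual per-emotion values are those of table 11.5 and are not reproduced here, and treating the endpoints −10 and 10 as allowed values is an assumption.

```python
from typing import Dict

# Assumed bounds: the endpoints are treated as valid values (an assumption).
PARAM_MIN = -10
PARAM_MAX = 10


def validate_affect_parameters(params: Dict[str, int]) -> Dict[str, int]:
    """Return a copy of params after checking each value is an in-range integer."""
    for name, value in params.items():
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, int):
            raise TypeError(f"{name} must be an integer, got {value!r}")
        if not PARAM_MIN <= value <= PARAM_MAX:
            raise ValueError(
                f"{name}={value} is outside {PARAM_MIN}..{PARAM_MAX}"
            )
    return dict(params)


def describe_effect(value: int) -> str:
    """Classify a value: negative is lesser, positive is greater, zero is neutral."""
    if value < 0:
        return "lesser"
    if value > 0:
        return "greater"
    return "neutral"


if __name__ == "__main__":
    # Hypothetical parameter names with made-up values, for illustration only.
    example = validate_affect_parameters({"param_a": -4, "param_b": 0, "param_c": 7})
    for name, value in example.items():
        print(name, value, describe_effect(value))
```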

Linear changes in these parameter values result in a nonlinear change in synthesizer settings. Furthermore, the mapping between parameters and synthesizer settings is not necessarily one-to-one. Each parameter affects a percentage of the final synthesizer setting’s value (table 11.4). When a synthesizer setting is modulated by more than one parameter, its