Page 29 - Human Inspired Dexterity in Robotic Manipulation


Digital Hand: Interface Between the Robot Hand and Human Hand 25

and Technology, where whole-body digital human research is also conducted. So far, the commercially available digital hand comes from the University of Iowa. Hand-dimension data for the Japanese population are also available [23].

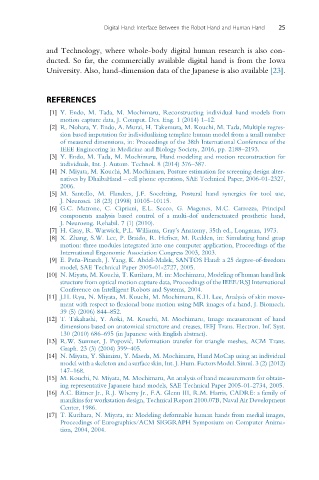

REFERENCES

[1] Y. Endo, M. Tada, M. Mochimaru, Reconstructing individual hand models from motion capture data, J. Comput. Des. Eng. 1 (2014) 1–12.

[2] R. Nohara, Y. Endo, A. Murai, H. Takemura, M. Kouchi, M. Tada, Multiple regression based imputation for individualizing template human model from a small number of measured dimensions, in: Proceedings of the 38th International Conference of the IEEE Engineering in Medicine and Biology Society, 2016, pp. 2188–2193.

[3] Y. Endo, M. Tada, M. Mochimaru, Hand modeling and motion reconstruction for individuals, Int. J. Autom. Technol. 8 (2014) 376–387.

[4] N. Miyata, M. Kouchi, M. Mochimaru, Posture estimation for screening design alternatives by DhaibaHand – cell phone operation, SAE Technical Paper 2006-01-2327, 2006.

[5] M. Santello, M. Flanders, J.F. Soechting, Postural hand synergies for tool use, J. Neurosci. 18 (23) (1998) 10105–10115.

[6] G.C. Matrone, C. Cipriani, E.L. Secco, G. Magenes, M.C. Carrozza, Principal components analysis based control of a multi-dof underactuated prosthetic hand, J. Neuroeng. Rehabil. 7 (1) (2010).

[7] H. Gray, R. Warwick, P.L. Williams, Gray’s Anatomy, 35th ed., Longman, 1973.

[8] X. Zhang, S.W. Lee, P. Braido, R. Hefner, M. Redden, Simulating hand grasp motion: three modules integrated into one computer application, in: Proceedings of the International Ergonomic Association Congress 2003, 2003.

[9] E. Peña-Pitarch, J. Yang, K. Abdel-Malek, SANTOS Hand: a 25 degree-of-freedom model, SAE Technical Paper 2005-01-2727, 2005.

[10] N. Miyata, M. Kouchi, T. Kurihara, M. Mochimaru, Modeling of human hand link structure from optical motion capture data, in: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, 2004.

[11] J.H. Ryu, N. Miyata, M. Kouchi, M. Mochimaru, K.H. Lee, Analysis of skin movement with respect to flexional bone motion using MR images of a hand, J. Biomech. 39 (5) (2006) 844–852.

[12] T. Takahashi, Y. Aoki, M. Kouchi, M. Mochimaru, Image measurement of hand dimensions based on anatomical structure and creases, IEEJ Trans. Electron. Inf. Syst. 130 (2010) 686–695 (in Japanese with English abstract).

[13] R.W. Sumner, J. Popović, Deformation transfer for triangle meshes, ACM Trans. Graph. 23 (3) (2004) 399–405.

[14] N. Miyata, Y. Shimizu, Y. Maeda, M. Mochimaru, Hand MoCap using an individual model with a skeleton and a surface skin, Int. J. Hum. Factors Model. Simul. 3 (2) (2012) 147–168.

[15] M. Kouchi, N. Miyata, M. Mochimaru, An analysis of hand measurements for obtaining representative Japanese hand models, SAE Technical Paper 2005-01-2734, 2005.

[16] A.C. Bittner Jr., R.J. Wherry Jr., F.A. Glenn III, R.M. Harris, CADRE: a family of manikins for workstation design, Technical Report 2100.07B, Naval Air Development Center, 1986.

[17] T. Kurihara, N. Miyata, Modeling deformable human hands from medical images, in: Proceedings of the Eurographics/ACM SIGGRAPH Symposium on Computer Animation, 2004.