Page 66 - Rapid Learning in Robotics

P. 66

52 The PSOM Algorithm

as conjugate gradient decent. It involves a line minimization procedure for

each gradient direction and optimizes successive search directions. The

line minimization usually probes E s s at three points to find the

one-dimensional sub-space minimum on the parabola. The idea behind

the conjugate gradient method is to accumulate information on successive

steps in order to lead to the exact minimum for quadratic cost functions

(Fletcher-Reeves and Polak-Ribiere, as well as other advanced variable met-

ric minimization schemes, e.g. the Fletcher-Powell algorithm; Stoer and Bu-

lirsch 1980; Press et al. 1988; Hertz et al. 1991).

The much more direct way is to use a second order method. In con-

trast to the feed-forward networks, the PSOM networks does allow easy

computation of the second derivatives of the basis function H a s (shown

later in this chapter).

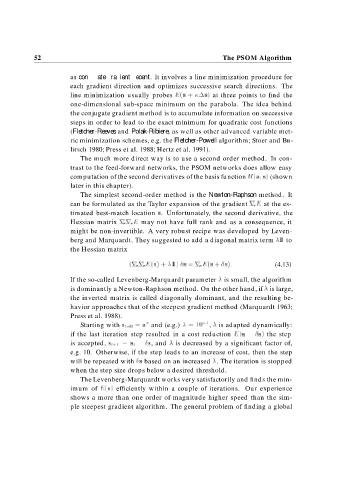

The simplest second-order method is the Newton-Raphson method. It

can be formulated as the Taylor expansion of the gradient r s E at the es-

timated best-match location s. Unfortunately, the second derivative, the

Hessian matrix r s r s E may not have full rank and as a consequence, it

might be non-invertible. A very robust recipe was developed by Leven-

berg and Marquardt. They suggested to add a diagonal matrix term to

the Hessian matrix

r s r s E s s r s E s s (4.13)

If the so-called Levenberg-Marquardt parameter is small, the algorithm

is dominantly a Newton-Raphson method. On the other hand, if is large,

the inverted matrix is called diagonally dominant, and the resulting be-

havior approaches that of the steepest gradient method (Marquardt 1963;

Press et al. 1988).

Starting with s t a and (e.g.) , is adapted dynamically:

if the last iteration step resulted in a cost reduction E s t s the step

is accepted, s t s t s, and is decreased by a significant factor of,

e.g. 10. Otherwise, if the step leads to an increase of cost, then the step

will be repeated with s based on an increased . The iteration is stopped

when the step size drops below a desired threshold.

The Levenberg-Marquardt works very satisfactorily and finds the min-

imum of E s efficiently within a couple of iterations. Our experience

shows a more than one order of magnitude higher speed than the sim-

ple steepest gradient algorithm. The general problem of finding a global