Page 532 -

P. 532

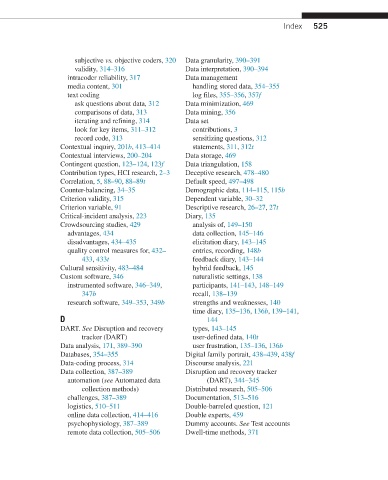

Index 525

subjective vs. objective coders, 320

validity, 314–316

intracoder reliability, 317

media content, 301

text coding

ask questions about data, 312

comparisons of data, 313

iterating and refining, 314

look for key items, 311–312

record code, 313

Contextual inquiry, 201b, 413–414

Contextual interviews, 200–204

Contingent question, 123–124, 123f

Contribution types, HCI research, 2–3

Correlation, 5, 88–90, 88–89t

Counter-balancing, 34–35

Criterion validity, 315

Criterion variable, 91

Critical-incident analysis, 223

Crowdsourcing studies, 429

advantages, 434

disadvantages, 434–435

quality control measures for, 432–433, 433t

Cultural sensitivity, 483–484

Custom software, 346

instrumented software, 346–349, 347b

research software, 349–353, 349b

D

DART. See Disruption and recovery tracker (DART)

Data analysis, 171, 389–390

Databases, 354–355

Data-coding process, 314

Data collection, 387–389

automation (see Automated data collection methods)

challenges, 387–389

logistics, 510–511

online data collection, 414–416

psychophysiology, 387–389

remote data collection, 505–506

Data granularity, 390–391

Data interpretation, 390–394

Data management

handling stored data, 354–355

log files, 355–356, 357f

Data minimization, 469

Data mining, 356

Data set

contributions, 3

sensitizing questions, 312

statements, 311, 312t

Data storage, 469

Data triangulation, 158

Deceptive research, 478–480

Default speed, 497–498

Demographic data, 114–115, 115b

Dependent variable, 30–32

Descriptive research, 26–27, 27t

Diary, 135

analysis of, 149–150

data collection, 145–146

elicitation diary, 143–145

entries, recording, 148b

feedback diary, 143–144

hybrid feedback, 145

naturalistic settings, 138

participants, 141–143, 148–149

recall, 138–139

strengths and weaknesses, 140

time diary, 135–136, 136b, 139–141, 144

types, 143–145

user-defined data, 140t

user frustration, 135–136, 136b

Digital family portrait, 438–439, 438f

Discourse analysis, 221

Disruption and recovery tracker (DART), 344–345

Distributed research, 505–506

Documentation, 513–516

Double-barreled question, 121

Double experts, 459

Dummy accounts. See Test accounts

Dwell-time methods, 371