Page 1126 - The Mechatronics Handbook

P. 1126

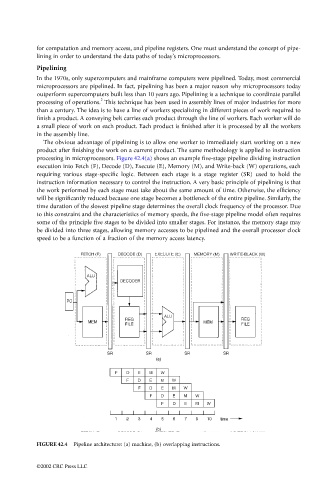

for computation and memory access, and pipeline registers. One must understand the concept of pipe-

lining in order to understand the data paths of today’s microprocessors.

Pipelining

In the 1970s, only supercomputers and mainframe computers were pipelined. Today, most commercial

microprocessors are pipelined. In fact, pipelining has been a major reason why microprocessors today

outperform supercomputers built less than 10 years ago. Pipelining is a technique to coordinate parallel

2

processing of operations. This technique has been used in assembly lines of major industries for more

than a century. The idea is to have a line of workers specializing in different pieces of work required to

finish a product. A conveying belt carries each product through the line of workers. Each worker will do

a small piece of work on each product. Each product is finished after it is processed by all the workers

in the assembly line.

The obvious advantage of pipelining is to allow one worker to immediately start working on a new

product after finishing the work on a current product. The same methodology is applied to instruction

processing in microprocessors. Figure 42.4(a) shows an example five-stage pipeline dividing instruction

execution into Fetch (F), Decode (D), Execute (E), Memory (M), and Write-back (W) operations, each

requiring various stage-specific logic. Between each stage is a stage register (SR) used to hold the

instruction information necessary to control the instruction. A very basic principle of pipelining is that

the work performed by each stage must take about the same amount of time. Otherwise, the efficiency

will be significantly reduced because one stage becomes a bottleneck of the entire pipeline. Similarly, the

time duration of the slowest pipeline stage determines the overall clock frequency of the processor. Due

to this constraint and the characteristics of memory speeds, the five-stage pipeline model often requires

some of the principle five stages to be divided into smaller stages. For instance, the memory stage may

be divided into three stages, allowing memory accesses to be pipelined and the overall processor clock

speed to be a function of a fraction of the memory access latency.

FIGURE 42.4 Pipeline architecture: (a) machine, (b) overlapping instructions.

©2002 CRC Press LLC