Page 179 - Introduction to Autonomous Mobile Robots


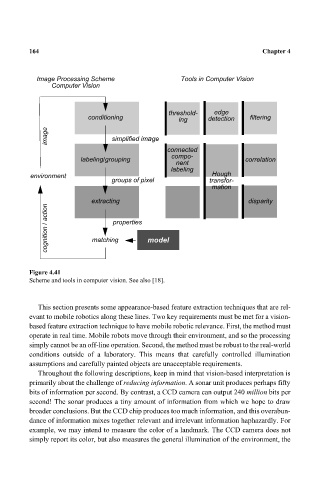

[Figure: "Image Processing Scheme / Computer Vision" (environment, image, conditioning, simplified image, labeling/grouping, groups of pixels, extracting, properties, matching model, cognition/action) alongside "Tools in Computer Vision" (thresholding, edge detection, filtering, connected component labeling, Hough transformation, correlation, disparity).]

Figure 4.41 Scheme and tools in computer vision. See also [18].
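The first stages of the scheme in Figure 4.41 can be illustrated with a minimal sketch: conditioning by thresholding, labeling/grouping by connected component labeling, and extracting simple properties from each group of pixels. The figure only names these tools. The specific choices here (a fixed global threshold, 4-connectivity, area and centroid as the extracted properties, images as nested Python lists) and the function names `threshold`, `label_components`, and `extract_properties` are illustrative assumptions, not the book's method.

```python
from collections import deque


def threshold(image, t):
    """Conditioning (assumed): map a grayscale image (list of rows) to a binary image."""
    return [[1 if px >= t else 0 for px in row] for row in image]


def label_components(binary):
    """Labeling/grouping: 4-connected component labeling by breadth-first search.

    Returns (labels, n): a label image where 0 is background and 1..n mark
    the connected groups of foreground pixels, numbered in raster-scan order.
    """
    h, w = len(binary), len(binary[0])
    labels = [[0] * w for _ in range(h)]
    n = 0
    for y in range(h):
        for x in range(w):
            if binary[y][x] and not labels[y][x]:
                n += 1  # found the first pixel of a new, unvisited group
                labels[y][x] = n
                queue = deque([(y, x)])
                while queue:
                    cy, cx = queue.popleft()
                    # Visit the four edge-adjacent neighbors (4-connectivity).
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if 0 <= ny < h and 0 <= nx < w and binary[ny][nx] and not labels[ny][nx]:
                            labels[ny][nx] = n
                            queue.append((ny, nx))
    return labels, n


def extract_properties(labels, n):
    """Extracting (assumed properties): area in pixels and centroid (x, y) of each group."""
    acc = {k: [0, 0, 0] for k in range(1, n + 1)}  # [area, sum of x, sum of y]
    for y, row in enumerate(labels):
        for x, k in enumerate(row):
            if k:
                acc[k][0] += 1
                acc[k][1] += x
                acc[k][2] += y
    return {k: {"area": a, "centroid": (sx / a, sy / a)} for k, (a, sx, sy) in acc.items()}


if __name__ == "__main__":
    image = [
        [10, 200, 210, 10, 10],
        [10, 220, 10, 10, 180],
        [10, 10, 10, 190, 185],
    ]
    labels, n = label_components(threshold(image, 128))
    print(n, extract_properties(labels, n))
```

With a threshold of 128, the small example image yields two groups of three pixels each, with centroids at (4/3, 1/3) and (11/3, 5/3). Each stage reduces the data further: from raw intensities to a binary image, then to labeled groups, then to a few numbers per group.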

This section presents some appearance-based feature extraction techniques that are relevant to mobile robotics along these lines. A vision-based feature extraction technique must meet two key requirements to be relevant to mobile robotics. First, the method must operate in real time. Mobile robots move through their environment, so the processing simply cannot be an off-line operation. Second, the method must be robust to real-world conditions outside the laboratory. It therefore cannot depend on carefully controlled illumination or on carefully painted objects.
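To put the real-time requirement in numbers, the following minimal arithmetic sketch computes the raw data rate of a camera and the per-frame processing budget. The camera parameters (640 × 480 pixels, 24-bit color, 30 frames per second) and the function names are illustrative assumptions, not values or methods from the text.

```python
def raw_bit_rate(width, height, bits_per_pixel, fps):
    """Uncompressed camera output in bits per second."""
    return width * height * bits_per_pixel * fps


def frame_budget_ms(fps):
    """Time available to process one frame if processing must keep up on-line."""
    return 1000.0 / fps


def fits_budget(processing_ms, fps):
    """True if a per-frame processing time keeps pace with the frame rate."""
    return processing_ms <= frame_budget_ms(fps)


if __name__ == "__main__":
    # Illustrative (assumed) camera: 640 x 480 pixels, 24-bit color, 30 frames per second
    print(raw_bit_rate(640, 480, 24, 30))                 # 221184000 bits per second
    print(round(frame_budget_ms(30), 1))                  # 33.3 ms per frame
    print(fits_budget(50.0, 30), fits_budget(20.0, 30))   # False True
```

With these assumed parameters the camera emits about 221 million bits per second, the same order of magnitude as the 240 million bits per second quoted for a CCD camera in the next paragraph, and any per-frame processing that takes longer than about 33 ms falls behind the image stream.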

Throughout the following descriptions, keep in mind that vision-based interpretation is primarily about the challenge of reducing information. A sonar unit produces perhaps fifty bits of information per second. By contrast, a CCD camera can output 240 million bits per second! The sonar produces a tiny amount of information from which we hope to draw broader conclusions. The CCD chip, however, produces far too much information, and this overabundance mixes relevant and irrelevant information haphazardly. For example, we may intend to measure the color of a landmark. The CCD camera does not simply report its color, but also measures the general illumination of the environment, the