Page 135 - Machine Learning for Subsurface Characterization


Stacked neural network architecture Chapter 4 111

residuals/errors of the predictions for all the samples in the dataset. RMSE is the square root of the average of the squared residuals/errors of the predictions for all the samples in the dataset. MAE and RMSE values close to 0 indicate good model performance. Compared with MAE, RMSE gives more weight to samples where the model has large errors and is more sensitive to the error distribution and to the variance in errors. Compared with RMSE, MAE is a more stable metric and is easier to interpret; each sample affects the MAE in direct proportion to the absolute error of prediction for that sample. A large difference between RMSE and MAE indicates large variations in the error distribution. MAE is suitable for uniformly distributed errors. RMSE is preferred over MAE when the errors follow a normal distribution. Neither MAE nor RMSE is suitable when the errors in model predictions are highly skewed or biased. The sensitivity of RMSE and MAE to outliers is the most common concern with the use of these metrics. MAE uses the absolute value, which makes it difficult to calculate the gradient or sensitivity with respect to the model parameters.
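The contrast between the two metrics can be illustrated with a minimal sketch. The helper names `mae` and `rmse` and the numbers below are hypothetical, chosen only for illustration; they are not taken from the study. Both error sets have the same total absolute error, but in the second set it is concentrated in a single sample.

```python
import math

def mae(y_true, y_pred):
    """Mean absolute error: average of |prediction - target| over all samples."""
    return sum(abs(p - t) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root-mean-square error: square root of the average squared residual."""
    return math.sqrt(sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

# Hypothetical targets and predictions (illustration only).
y_true = [0.0, 0.0, 0.0, 0.0]
evenly_spread = [2.0, 2.0, 2.0, 2.0]   # every sample is off by 2
one_large_miss = [0.0, 0.0, 0.0, 8.0]  # same total absolute error, one outlier

print(mae(y_true, evenly_spread), rmse(y_true, evenly_spread))    # 2.0 2.0
print(mae(y_true, one_large_miss), rmse(y_true, one_large_miss))  # 2.0 4.0
```

MAE is identical in both cases, whereas RMSE doubles when the error is concentrated in one sample, which is why a large gap between RMSE and MAE points to a wide spread in the errors.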

A modification of RMSE is the normalized root-mean-square error (NRMSE), obtained by normalizing the RMSE with the target mean, median, deviation, range, or interquartile range. In our study, we normalize the RMSE of a target by the target range, which is the difference between the maximum and minimum values of the target. NRMSE facilitates comparison of model performances when various models are trained and tested on different datasets. Compared with RMSE, NRMSE is suitable for the following scenarios:

1. Evaluating model performances on different datasets, for example, comparing performance of SNN model on training dataset with that on testing dataset or comparing performance of SNN model in Well 1 with that in Well 2

2. Evaluating model performances for different targets/outputs, for example, comparing performance of SNN model for predicting the four conductivity logs with that for predicting the four permittivity logs or comparing performance of SNN model for predicting conductivity log at one frequency with that for another frequency

3. Evaluating performances of different models on completely different datasets, for example, comparing performances of a log-synthesis model with a house-price prediction model

4. Evaluating model performances on various transformations of the same dataset
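The scenarios above share one idea: dividing RMSE by the target range removes the dependence on the scale of the target. A minimal sketch follows, assuming range normalization as described in the text. The function names and the sample values are hypothetical; the second dataset is simply the first multiplied by 1000, standing in for a rescaled version of the same target.

```python
import math

def rmse(y_true, y_pred):
    """Root-mean-square error over all samples."""
    return math.sqrt(sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def nrmse_range(y_true, y_pred):
    """RMSE divided by the target range (max minus min of the target)."""
    target_range = max(y_true) - min(y_true)
    if target_range == 0:
        raise ValueError("target range is zero, so range-normalized RMSE is undefined")
    return rmse(y_true, y_pred) / target_range

# Hypothetical target and prediction (illustration only).
target_a = [1.0, 2.0, 3.0, 5.0]
pred_a = [1.5, 2.0, 2.5, 5.0]

# The same data expressed on a scale 1000 times larger.
target_b = [1000.0 * v for v in target_a]
pred_b = [1000.0 * v for v in pred_a]

print(rmse(target_a, pred_a), rmse(target_b, pred_b))                # differ by a factor of 1000
print(nrmse_range(target_a, pred_a), nrmse_range(target_b, pred_b))  # approximately equal
```

RMSE changes with the scale of the target, while the range-normalized value stays the same up to floating-point rounding, so it can be compared across targets or datasets with different scales.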

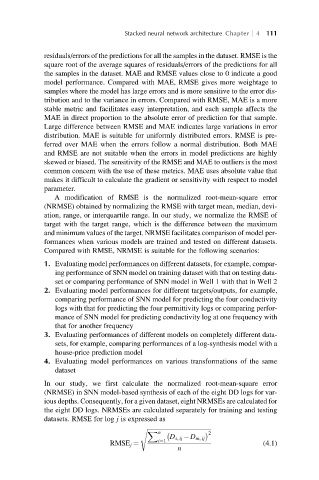

In our study, we first calculate the normalized root-mean-square error (NRMSE) in SNN model-based synthesis of each of the eight DD logs for various depths. Consequently, for a given dataset, eight NRMSEs are calculated for the eight DD logs. NRMSEs are calculated separately for training and testing datasets. RMSE for log j is expressed as

$$
\mathrm{RMSE}_j = \sqrt{\frac{\sum_{i=1}^{n} \left(D_{s,ij} - D_{m,ij}\right)^2}{n}} \tag{4.1}
$$
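Eq. (4.1) can be evaluated for all logs at once, as in the sketch below. It assumes that the index i runs over the n depths, that j identifies the log, and that the subscripts s and m denote synthesized and measured values; because the residual is squared, the result does not depend on which of the two is subtracted. The function name `rmse_per_log` and the sample values are hypothetical.

```python
import math

def rmse_per_log(synthesized, measured):
    """Return one RMSE per log (column), following Eq. (4.1).

    synthesized[i][j] and measured[i][j] hold the value of log j at depth i.
    """
    n = len(measured)
    if n == 0 or len(synthesized) != n:
        raise ValueError("both inputs need the same, nonzero number of depths")
    n_logs = len(measured[0])
    squared_sums = [0.0] * n_logs
    for s_row, m_row in zip(synthesized, measured):
        for j in range(n_logs):
            squared_sums[j] += (s_row[j] - m_row[j]) ** 2
    return [math.sqrt(total / n) for total in squared_sums]

# Hypothetical example with 3 depths and 2 logs (illustration only).
measured = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
synthesized = [[1.0, 13.0], [2.0, 16.0], [3.0, 30.0]]

per_log = rmse_per_log(synthesized, measured)
print(per_log)  # first log is reproduced exactly; second has residuals 3, -4, 0
```

With eight DD logs, the same call would return eight values, one per log, computed separately for the training and testing datasets.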