Page 818 - Mechanical Engineers' Handbook (Volume 2)

P. 818

8 Reinforcement Learning Control Using NNs 809

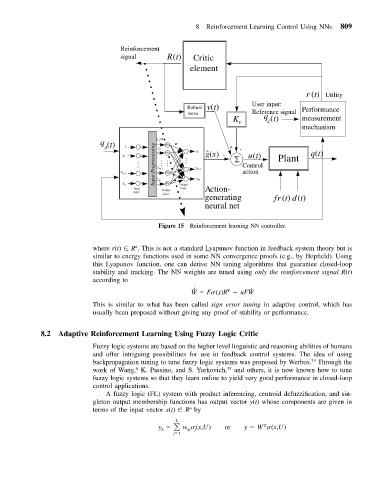

Reinforcement

signal R(t) Critic

element

r (t) Utility

Robust v(t) User input: Performance

term Reference signal

K v q d (t) measurement

mechanism

q (t) z 1 =1 σ () ⋅ W

Input Preprocessing z N-1 y m-1 Control

d 1 z 2 σ () ⋅ y 1 + -

x 1 ˆ g(x) - ∑ u(t) Plant q(t)

x n-1 σ () ⋅ action

x n z N σ () ⋅ Output y m

Input Hidden layer Action-

layer

layer

generating fr (t) d(t)

neural net

Figure 15 Reinforcement learning NN controller.

n

where r(t) R . This is not a standard Lyapunov function in feedback system theory but is

similar to energy functions used in some NN convergence proofs (e.g., by Hopfield). Using

this Lyapunov function, one can derive NN tuning algorithms that guarantee closed-loop

stability and tracking. The NN weights are tuned using only the reinforcement signal R(t)

according to

˙

ˆ

ˆ

T

W F (x)R FW

This is similar to what has been called sign error tuning in adaptive control, which has

usually been proposed without giving any proof of stability or performance.

8.2 Adaptive Reinforcement Learning Using Fuzzy Logic Critic

Fuzzy logic systems are based on the higher level linguistic and reasoning abilities of humans

and offer intriguing possibilities for use in feedback control systems. The idea of using

33

backpropagation tuning to tune fuzzy logic systems was proposed by Werbos. Through the

6

work of Wang, K. Passino, and S. Yurkovich, 35 and others, it is now known how to tune

fuzzy logic systems so that they learn online to yield very good performance in closed-loop

control applications.

A fuzzy logic (FL) system with product inferencing, centroid defuzzification, and sin-

gleton output membership functions has output vector y(t) whose components are given in

n

terms of the input vector x(t) R by

y w (x,U) or y W (x,U)

L

T

k

j 1 kj j