Page 244 - Mechatronics for Safety, Security and Dependability in a New Era


Ch46-I044963.fm Page 228 Tuesday, August 1, 2006 3:57 PM


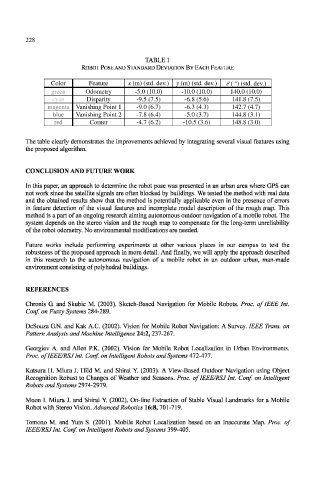

TABLE 1

ROBOT POSE AND STANDARD DEVIATION BY EACH FEATURE

| Color | Feature | x (m) (std. dev.) | y (m) (std. dev.) | θ (°) (std. dev.) |
|---|---|---|---|---|
| green | Odometry | -5.0 (10.0) | -10.0 (10.0) | 140.0 (10.0) |
| cyan | Disparity | -9.5 (7.5) | -6.8 (5.6) | 141.8 (7.5) |
| magenta | Vanishing Point 1 | -9.0 (6.7) | -6.3 (4.3) | 142.7 (4.7) |
| blue | Vanishing Point 2 | -7.8 (6.4) | -5.0 (3.7) | 144.8 (3.1) |
| red | Corner | -4.7 (6.2) | -10.5 (3.6) | 148.8 (3.0) |

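Table 1 shows the improvement achieved by integrating several visual features with the proposed algorithm: from the odometry-only row to the corner row, the standard deviations of x, y, and θ fall from 10.0 m, 10.0 m, and 10.0° to 6.2 m, 3.6 m, and 3.0°, respectively.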
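The size of these reductions can be read directly off Table 1. The short Python sketch below is a convenience for the reader rather than part of the proposed method: it transcribes the standard deviations from Table 1 and computes, for each row, the percentage drop relative to the odometry-only row. The names `TABLE_1_STD`, `reduction_vs_odometry`, and `is_non_increasing` are illustrative only.

```python
# Standard deviations transcribed from Table 1: (feature, x [m], y [m], theta [deg]).
TABLE_1_STD = [
    ("Odometry", 10.0, 10.0, 10.0),
    ("Disparity", 7.5, 5.6, 7.5),
    ("Vanishing Point 1", 6.7, 4.3, 4.7),
    ("Vanishing Point 2", 6.4, 3.7, 3.1),
    ("Corner", 6.2, 3.6, 3.0),
]


def reduction_vs_odometry(rows):
    """Map each feature to the percentage drop of its x, y and theta std. dev.
    relative to the first (odometry-only) row, rounded to one decimal."""
    base = rows[0][1:]
    out = {}
    for name, *stds in rows:
        out[name] = tuple(round(100.0 * (b - s) / b, 1) for b, s in zip(base, stds))
    return out


def is_non_increasing(rows, column):
    """True if the std. dev. in the given column (1 = x, 2 = y, 3 = theta)
    never grows from one row to the next."""
    values = [row[column] for row in rows]
    return all(a >= b for a, b in zip(values, values[1:]))


if __name__ == "__main__":
    for name, pct in reduction_vs_odometry(TABLE_1_STD).items():
        print(f"{name:18s} x: {pct[0]:5.1f}%  y: {pct[1]:5.1f}%  theta: {pct[2]:5.1f}%")
```

For the corner row this gives reductions of 38.0 %, 64.0 %, and 70.0 % in the standard deviations of x, y, and θ, and in every column the standard deviation never increases from one row to the next.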

CONCLUSION AND FUTURE WORK

In this paper, an approach was presented for determining the pose of a robot in urban areas, where GPS is unreliable because buildings often block the satellite signals. We tested the method with real data, and the results show that it is potentially applicable even when the detection of the visual features contains errors and the rough map describes the environment only incompletely. This method is part of ongoing research aimed at the autonomous outdoor navigation of a mobile robot. The system relies on stereo vision and the rough map to compensate for the long-term unreliability of the robot's odometry, and no modifications to the environment are needed.
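Why absolute visual measurements compensate for odometry can be illustrated with a one-dimensional toy model. The Python sketch below is not the estimator used in this paper, which this section does not describe; it assumes independent Gaussian errors, a hypothetical per-step odometry variance `step_var`, and a hypothetical variance `fix_var` for an absolute fix standing in for a vision/map measurement, and it combines estimates by standard inverse-variance weighting (the scalar Kalman update). The function names are illustrative.

```python
import math


def fuse_estimates(mean_a, var_a, mean_b, var_b):
    """Inverse-variance weighted fusion of two independent Gaussian estimates
    of the same scalar quantity; returns (fused_mean, fused_variance)."""
    w_a, w_b = 1.0 / var_a, 1.0 / var_b
    mean = (w_a * mean_a + w_b * mean_b) / (w_a + w_b)
    return mean, 1.0 / (w_a + w_b)


def pose_std_history(steps, step_var, fix_var, fix_every=0):
    """Std. dev. of a 1-D pose estimate after each odometry step.

    Each step adds independent motion noise of variance step_var, so with
    odometry alone the variance grows without bound. If fix_every > 0, an
    absolute fix of variance fix_var (a hypothetical stand-in for a
    vision/map measurement) is fused every fix_every steps. Only variances
    are tracked; the means passed to fuse_estimates are placeholders because
    the fused variance does not depend on them.
    """
    var = 0.0
    history = []
    for step in range(1, steps + 1):
        var += step_var  # dead reckoning: uncertainty accumulates
        if fix_every and step % fix_every == 0:
            _, var = fuse_estimates(0.0, var, 0.0, fix_var)
        history.append(math.sqrt(var))
    return history


if __name__ == "__main__":
    odometry_only = pose_std_history(100, step_var=0.01, fix_var=0.04)
    with_fixes = pose_std_history(100, step_var=0.01, fix_var=0.04, fix_every=10)
    print(f"odometry only: final std = {odometry_only[-1]:.3f}")
    print(f"with fixes:    max std   = {max(with_fixes):.3f}")
```

With the arbitrary illustrative values `step_var = 0.01` and `fix_var = 0.04`, odometry alone reaches a standard deviation of about 1.0 after 100 steps, whereas fusing a fix every 10 steps keeps it below 0.4 throughout.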

Future work includes experiments at various other places on our campus to test the robustness of the proposed approach in more detail. Finally, we will apply the approach described in this research to the autonomous navigation of a mobile robot in outdoor urban, man-made environments consisting of polyhedral buildings.
