Page 57 - Neural Network Modeling and Identification of Dynamical Systems


2.1 ARTIFICIAL NEURAL NETWORK STRUCTURES 45

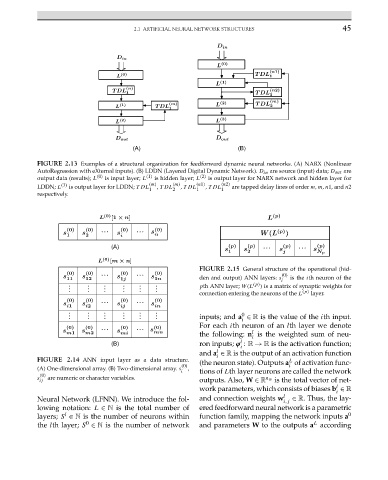

FIGURE 2.13 Examples of a structural organization for feedforward dynamic neural networks. (A) NARX (Nonlinear AutoRegression with eXternal inputs). (B) LDDN (Layered Digital Dynamic Network). $D_{in}$ are source (input) data; $D_{out}$ are output data (results); $L^{(0)}$ is the input layer; $L^{(1)}$ is a hidden layer; $L^{(2)}$ is the output layer for the NARX network and a hidden layer for the LDDN; $L^{(3)}$ is the output layer for the LDDN; $TDL_1^{(m)}$, $TDL_2^{(m)}$, $TDL_1^{(n_1)}$, $TDL_1^{(n_2)}$ are tapped delay lines of order $m$, $m$, $n_1$, and $n_2$, respectively.
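To make the tapped delay lines of Fig. 2.13 concrete, here is a minimal Python sketch, not the book's implementation. It assumes that a line of order $m$ stores the delayed samples $x(k-1), \dots, x(k-m)$ with zero initial conditions, and that a NARX regressor is formed by concatenating the delayed external inputs with the delayed, fed-back outputs. `TappedDelayLine` and `narx_regressor` are hypothetical names, and the toy recurrence in the usage lines only stands in for a trained network.

```python
from collections import deque
from typing import List


class TappedDelayLine:
    """Tapped delay line of order m: stores x(k-1), ..., x(k-m), newest first."""

    def __init__(self, order: int, initial: float = 0.0) -> None:
        # Assumption: the line starts filled with `initial` (zero initial conditions).
        self._buf = deque([initial] * order, maxlen=order)

    def taps(self) -> List[float]:
        """Current delayed samples [x(k-1), x(k-2), ..., x(k-m)]."""
        return list(self._buf)

    def push(self, value: float) -> None:
        """Shift in x(k); with maxlen set, the oldest sample x(k-m) is discarded."""
        self._buf.appendleft(value)


def narx_regressor(u_line: TappedDelayLine, y_line: TappedDelayLine) -> List[float]:
    """Hypothetical NARX input vector: delayed external inputs, then delayed outputs."""
    return u_line.taps() + y_line.taps()


if __name__ == "__main__":
    u_tdl, y_tdl = TappedDelayLine(order=2), TappedDelayLine(order=2)
    for u in [1.0, 0.0, 0.0]:
        x = narx_regressor(u_tdl, y_tdl)
        # Toy stand-in for the network f: y(k) = u(k-1) + 0.5 * y(k-1)
        # (with both lines of order 2, x[0] is u(k-1) and x[2] is y(k-1)).
        y = x[0] + 0.5 * x[2]
        u_tdl.push(u)
        y_tdl.push(y)  # the output is fed back through its own delay line
        print(x, "->", y)
```

Keeping one delay line per signal mirrors the separate TDL blocks in the figure, and a bounded `deque` makes each shift a constant-time operation.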

FIGURE 2.15 General structure of the operational (hidden and output) ANN layers: $s_i^{(p)}$ is the $i$th neuron of the $p$th ANN layer; $W(L^{(p)})$ is a matrix of synaptic weights for the connections entering the neurons of the $L^{(p)}$ layer.
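The layer structure of Fig. 2.15 can be sketched in plain Python. This is an illustration under assumptions, not the book's code: it assumes the usual layered form $n_i^l = b_i^l + \sum_j w_{i,j}^l a_j^{l-1}$, $a_i^l = \varphi_i^l(n_i^l)$, built from the biases, weights, weighted sums, and activation functions defined in the text, and it stores $W(L^{(l)})$ as $S^l$ rows of $S^{l-1}$ weights each. The helpers `layer_forward`, `lfnn_forward`, and `count_parameters` are hypothetical; `count_parameters` returns the length $n_w$ of the total parameter vector $W$.

```python
import math
from typing import Callable, List, Sequence, Tuple

Activation = Callable[[float], float]
# Hypothetical layer record: (weight matrix W(L^(l)), biases b^l, activations phi^l)
Layer = Tuple[List[List[float]], List[float], List[Activation]]


def layer_forward(W: Sequence[Sequence[float]], b: Sequence[float],
                  phi: Sequence[Activation], a_prev: Sequence[float]) -> List[float]:
    """One operational layer: n_i = b_i + sum_j w_ij * a_j^(l-1), then a_i = phi_i(n_i)."""
    # Each row of W holds the weights of the connections entering one neuron.
    if any(len(row) != len(a_prev) for row in W):
        raise ValueError("W must have S^(l-1) columns")
    n = [b_i + sum(w_ij * a_j for w_ij, a_j in zip(row, a_prev))
         for row, b_i in zip(W, b)]
    return [phi_i(n_i) for phi_i, n_i in zip(phi, n)]


def lfnn_forward(layers: Sequence[Layer], a0: Sequence[float]) -> List[float]:
    """Map the inputs a^0 through layers 1..L to the network outputs a^L."""
    a = list(a0)
    for W, b, phi in layers:
        a = layer_forward(W, b, phi, a)
    return a


def count_parameters(layers: Sequence[Layer]) -> int:
    """Length n_w of the total parameter vector: all biases plus all connection weights."""
    return sum(len(b) + sum(len(row) for row in W) for W, b, _ in layers)


if __name__ == "__main__":
    identity = lambda x: x
    # S^0 = 2 inputs, S^1 = 2 tanh neurons, S^2 = 1 linear output neuron (L = 2).
    net = [
        ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], [math.tanh, math.tanh]),
        ([[2.0, 1.0]], [0.5], [identity]),
    ]
    print(lfnn_forward(net, [1.0, 1.0]))  # one output, equal to 0.5 + tanh(1)
    print(count_parameters(net))          # 9 = 2*(2+1) + 1*(2+1)
```

Passing the activations per neuron, rather than one per layer, keeps the sketch faithful to the per-neuron notation $\varphi_i^l$.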

FIGURE 2.14 ANN input layer as a data structure. (A) One-dimensional array. (B) Two-dimensional array. $s_i^{(0)}$, $s_{ij}^{(0)}$ are numeric or character variables.

Neural Network (LFNN). We introduce the following notation: $L \in \mathbb{N}$ is the total number of layers; $S^l \in \mathbb{N}$ is the number of neurons within the $l$th layer; $S^0 \in \mathbb{N}$ is the number of network inputs; and $a_i^0 \in \mathbb{R}$ is the value of the $i$th input. For each $i$th neuron of the $l$th layer we denote the following: $n_i^l$ is the weighted sum of the neuron inputs; $\varphi_i^l : \mathbb{R} \to \mathbb{R}$ is the activation function; and $a_i^l \in \mathbb{R}$ is the output of the activation function (the neuron state). The outputs $a_i^L$ of the activation functions of the $L$th-layer neurons are called the network outputs. Also, $W \in \mathbb{R}^{n_w}$ is the total vector of network parameters, which consists of the biases $b_i^l \in \mathbb{R}$ and the connection weights $w_{i,j}^l \in \mathbb{R}$. Thus, the layered feedforward neural network is a parametric family of functions mapping the network inputs $a^0$ and parameters $W$ to the outputs $a^L$ according to