Page 239 - Numerical Methods for Chemical Engineering


228 5 Numerical optimization

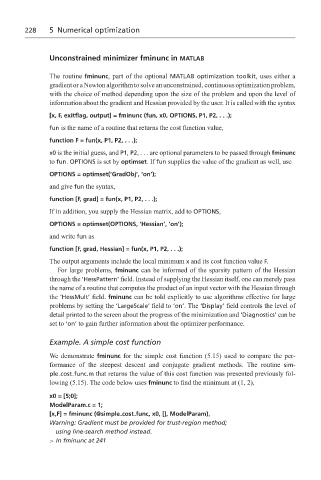

Unconstrained minimizer fminunc in MATLAB

The routine fminunc, part of the optional MATLAB Optimization Toolbox, uses either a gradient or a Newton algorithm to solve an unconstrained, continuous optimization problem. The choice of method depends upon the size of the problem and upon how much information about the gradient and Hessian the user provides. It is called with the syntax

[x, F, exitflag, output] = fminunc (fun, x0, OPTIONS, P1, P2,...);

fun is the name of a routine that returns the cost function value,

function F = fun(x, P1, P2,...);

x0 is the initial guess, and P1, P2, ... are optional parameters to be passed through fminunc to fun. OPTIONS is set by optimset. If fun supplies the value of the gradient as well, use

OPTIONS = optimset('GradObj', 'on');

and give fun the syntax,

function [F, grad] = fun(x, P1, P2,...);

If, in addition, you supply the Hessian matrix, add to OPTIONS,

OPTIONS = optimset(OPTIONS, 'Hessian', 'on');

and write fun as

function [F, grad, Hessian] = fun(x, P1, P2,...);

The output arguments include the local minimum x and its cost function value F.
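
As a minimal sketch of these calling conventions, the script below minimizes a hypothetical convex quadratic whose minimum lies at (1, 2). The names quad_cost and P are assumptions made for illustration; this is not the cost function (5.15) of the text. nargout is tested so that the gradient and Hessian are computed only when the caller requests them. Local functions at the end of a script require a recent MATLAB release; otherwise quad_cost can be saved in its own file. Whether the supplied Hessian is actually used depends on the algorithm that the installed toolbox version selects.

```matlab
% Script part: tell fminunc that fun returns the gradient and the Hessian.
% quad_cost and the struct P are hypothetical names used only for illustration.
P.c = 1;                 % parameter passed through fminunc to the cost function
x0 = [5; 0];             % initial guess
OPTIONS = optimset('GradObj', 'on');
OPTIONS = optimset(OPTIONS, 'Hessian', 'on');
[x, F, exitflag] = fminunc(@quad_cost, x0, OPTIONS, P);

function [F, grad, Hessian] = quad_cost(x, P)
% Hypothetical quadratic cost F = (x1 - 1)^2 + c*(x2 - 2)^2.
    d = x - [1; 2];              % displacement from the minimum
    A = [2, 0; 0, 2*P.c];        % constant Hessian of the quadratic
    F = 0.5 * (d' * A * d);
    if nargout > 1               % gradient requested
        grad = A * d;
    end
    if nargout > 2               % Hessian requested
        Hessian = A;
    end
end
```

Because the cost function here is quadratic, a Newton-type step reaches the minimum almost immediately, so the sketch mainly demonstrates the interface rather than the performance of the optimizer.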

For large problems, fminunc can be informed of the sparsity pattern of the Hessian through the 'HessPattern' field. Instead of supplying the Hessian itself, one can merely pass the name of a routine that computes the product of an input vector with the Hessian through the 'HessMult' field. fminunc can be told explicitly to use algorithms effective for large problems by setting the 'LargeScale' field to 'on'. The 'Display' field controls the level of detail printed to the screen about the progress of the minimization, and 'Diagnostics' can be set to 'on' to gain further information about the optimizer's performance.
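
The following sketch shows how such an OPTIONS structure might be assembled for a problem whose Hessian is tridiagonal. The problem size n and the tridiagonal pattern S are assumptions chosen for illustration, and the field names are those given in the text; newer toolbox releases may prefer a different way of selecting the algorithm.

```matlab
% Assumed example: n unknowns, each coupled only to its nearest neighbors,
% so the Hessian has nonzeros on the main diagonal and the two adjacent ones.
n = 100;
S = spdiags(ones(n, 3), -1:1, n, n);   % sparse 0/1 pattern of the Hessian

OPTIONS = optimset('GradObj', 'on');              % fun returns the gradient
OPTIONS = optimset(OPTIONS, 'LargeScale', 'on');  % request large-scale methods
OPTIONS = optimset(OPTIONS, 'HessPattern', S);    % sparsity pattern of the Hessian
OPTIONS = optimset(OPTIONS, 'Display', 'iter');   % print progress at each iteration
OPTIONS = optimset(OPTIONS, 'Diagnostics', 'on'); % report extra optimizer information
```

OPTIONS is then passed as the third argument of fminunc, exactly as in the calling syntax given earlier.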

Example. A simple cost function

We demonstrate fminunc for the simple cost function (5.15) used to compare the performance of the steepest descent and conjugate gradient methods. The routine simple_cost_func.m that returns the value of this cost function was presented previously following (5.15). The code below uses fminunc to find the minimum at (1, 2),

x0 = [5;0];

ModelParam.c = 1;

[x,F] = fminunc (@simple_cost_func, x0, [], ModelParam),

Warning: Gradient must be provided for trust-region method;

using line-search method instead.

> In fminunc at 241