Page 27 - Artificial Intelligence in the Age of Neural Networks and Brain Computing


14 CHAPTER 1 Nature’s Learning Rule: The Hebbian-LMS Algorithm

range, or it could remain saturated. Saturation would not necessarily be permanent

(as would occur with Hebb’s original learning rule).

The neuron and its synapses in Fig. 1.9 are identical to those of Fig. 1.7, except

that the final output is obtained from a “half sigmoid.” The output will therefore be zero or positive,

the weights will be positive, and some of the weighted inputs will be excitatory and

some inhibitory, which is equivalent to having positive and negative inputs. The (SUM) can therefore be

negative or positive.

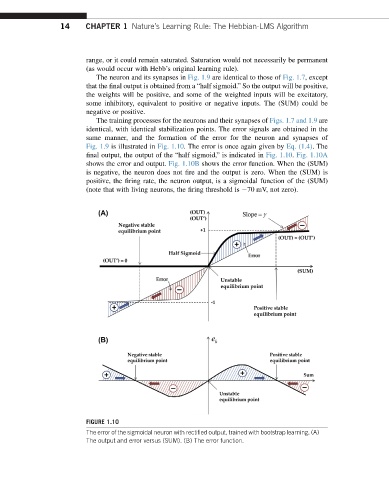

The training processes for the neurons and their synapses of Figs. 1.7 and 1.9 are

identical, with identical stabilization points. The error signals are obtained in the

same manner, and the formation of the error for the neuron and synapses of

Fig. 1.9 is illustrated in Fig. 1.10. The error is once again given by Eq. (1.4). The

final output, the output of the “half sigmoid,” is indicated in Fig. 1.10. Fig. 1.10A

shows the error and output. Fig. 1.10B shows the error function. When the (SUM)

is negative, the neuron does not fire and the output is zero. When the (SUM) is

positive, the firing rate, the neuron output, is a sigmoidal function of the (SUM)

(note that with living neurons, the firing threshold is −70 mV, not zero).
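
The relationship between the (SUM), the rectified output, and the error can be sketched numerically. Eq. (1.4) is not reproduced on this page, so the sketch below assumes it has the form error = SGM(SUM) − γ·(SUM). The use of tanh as the sigmoid, the value γ = 0.5, and all function names are illustrative assumptions rather than the authors' definitions.

```python
import math

GAMMA = 0.5  # assumed slope of the linear term; illustrative value only


def sigmoid(s: float) -> float:
    """Full sigmoid of the (SUM). tanh is an assumed choice of sigmoid."""
    return math.tanh(s)


def half_sigmoid(s: float) -> float:
    """Rectified ("half sigmoid") output: zero when the (SUM) is negative,
    sigmoidal in the (SUM) when it is positive."""
    return max(0.0, sigmoid(s))


def error(s: float, gamma: float = GAMMA) -> float:
    """Assumed form of Eq. (1.4): error = SGM(SUM) - gamma * SUM.
    The error is formed from the full sigmoid, not from the rectified output."""
    return sigmoid(s) - gamma * s


if __name__ == "__main__":
    # Tabulate output and error over a range of (SUM) values.
    for s in (-3.0, -1.0, 0.0, 1.0, 3.0):
        print(f"SUM={s:+.1f}  output={half_sigmoid(s):.4f}  error={error(s):+.4f}")
```

Under these assumptions the error is zero at the origin and changes sign at a nonzero (SUM) on each side of it, while the output is zero for every negative (SUM). This matches the text's statement that only the final output differs from the neuron of Fig. 1.7.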

FIGURE 1.10

The error of the sigmoidal neuron with rectified output, trained with bootstrap learning. (A)

The output and error versus (SUM). (B) The error function.