4.3 / ELEMENTS OF CACHE DESIGN 129

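[Figure 4.11 diagram labels: a memory address of s + w bits, split into a Tag field (s bits) and a Word field (w bits); a cache with lines L0 through L(m-1), each holding a Tag and Data; main memory words W0, W1, W2, W3, ... grouped into blocks B0 through Bj, where block Bj holds W4j through W(4j+3); a Compare unit whose hit output is 1 if match, 0 if no match (hit in cache) and whose miss output is 0 if match, 1 if no match (miss in cache).]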

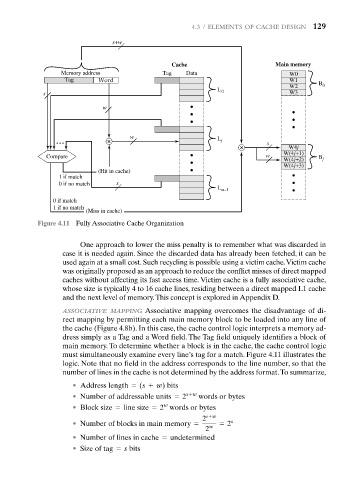

Figure 4.11 Fully Associative Cache Organization

One approach to lowering the miss penalty is to remember what was discarded in case it is needed again. Since the discarded data has already been fetched, it can be used again at a small cost. Such recycling is possible using a victim cache. The victim cache was originally proposed as an approach to reduce the conflict misses of direct-mapped caches without affecting their fast access time. A victim cache is a fully associative cache, typically 4 to 16 cache lines in size, residing between a direct-mapped L1 cache and the next level of memory. This concept is explored in Appendix D.
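The recycling idea can be illustrated with a minimal Python sketch. It is a simulation under stated assumptions, not the design from Appendix D: the class name `VictimCachedL1`, the default sizes, the oldest-first discard policy in the victim cache, and the choice to move a recovered block back into L1 are all hypothetical choices made for illustration. Blocks are represented only by their block numbers.

```python
from collections import OrderedDict


class VictimCachedL1:
    """Direct-mapped L1 with a small fully associative victim cache (sketch)."""

    def __init__(self, num_lines=8, victim_lines=4):
        self.num_lines = num_lines
        self.victim_lines = victim_lines
        self.l1 = [None] * num_lines   # l1[i] holds a block number or None
        self.victim = OrderedDict()    # discarded block numbers, oldest first

    def access(self, block):
        """Return 'l1_hit', 'victim_hit', or 'miss' for a block number."""
        index = block % self.num_lines  # direct mapping: exactly one candidate line
        if self.l1[index] == block:
            return "l1_hit"
        evicted = self.l1[index]        # block about to be displaced (may be None)
        if block in self.victim:
            del self.victim[block]      # recovered cheaply; it moves back into L1
            result = "victim_hit"
        else:
            result = "miss"             # would be fetched from the next memory level
        self.l1[index] = block
        if evicted is not None:
            self._remember(evicted)
        return result

    def _remember(self, block):
        """Keep a discarded block; drop the oldest entry when full (assumed policy)."""
        self.victim[block] = True
        self.victim.move_to_end(block)
        while len(self.victim) > self.victim_lines:
            self.victim.popitem(last=False)
```

With 8 L1 lines, blocks 0 and 8 compete for the same line. Accessing the sequence 0, 8, 0, 8 on a fresh `VictimCachedL1()` gives `miss`, `miss`, `victim_hit`, `victim_hit`: after the two compulsory misses, each conflict is served from the victim cache rather than from the next level of memory.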

ASSOCIATIVE MAPPING Associative mapping overcomes the disadvantage of direct mapping by permitting each main memory block to be loaded into any line of the cache (Figure 4.8b). In this case, the cache control logic interprets a memory address simply as a Tag and a Word field. The Tag field uniquely identifies a block of main memory. To determine whether a block is in the cache, the cache control logic must simultaneously examine every line's tag for a match. Figure 4.11 illustrates the logic. Note that no field in the address corresponds to the line number, so the number of lines in the cache is not determined by the address format. To summarize,

• Address length = (s + w) bits

• Number of addressable units = $2^{s+w}$ words or bytes

• Block size = line size = $2^w$ words or bytes

• Number of blocks in main memory = $\frac{2^{s+w}}{2^w} = 2^s$

• Number of lines in cache = undetermined

• Size of tag = s bits
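The address interpretation and tag search summarized above can be sketched in Python. This is a minimal illustration, not a hardware model: the names `split_address` and `FullyAssociativeCache` are hypothetical, and the loop over lines stands in for what the hardware does in parallel with one comparator per line. A miss is signaled here by returning `None`, which is also an assumption of the sketch.

```python
def split_address(address, w):
    """Split an (s + w)-bit address into its (tag, word) fields."""
    tag = address >> w                # upper s bits identify the main memory block
    word = address & ((1 << w) - 1)   # lower w bits select a word within the block
    return tag, word


class FullyAssociativeCache:
    """Any block may occupy any line, so every line's tag must be checked."""

    def __init__(self, num_lines, w):
        self.w = w
        self.lines = [None] * num_lines   # each entry is (tag, block_data) or None

    def load(self, line, tag, block_data):
        """Place a block of 2**w words, with its tag, into the chosen line."""
        self.lines[line] = (tag, list(block_data))

    def lookup(self, address):
        """Return the addressed word on a hit, or None on a miss."""
        tag, word = split_address(address, self.w)
        for stored in self.lines:         # sequential stand-in for parallel compare
            if stored is not None and stored[0] == tag:
                return stored[1][word]    # hit: the Word field indexes the line
        return None                       # miss: no line holds this tag
```

As an illustrative case with assumed values s = 22 and w = 2 (a 24-bit address and 4-word blocks), `split_address(0x16339C, 2)` yields tag `0x058CE7` and word `0`. The number of lines passed to `FullyAssociativeCache` is a free parameter, which mirrors the point that the address format does not determine the cache size.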