Page 1133 - The Mechatronics Handbook


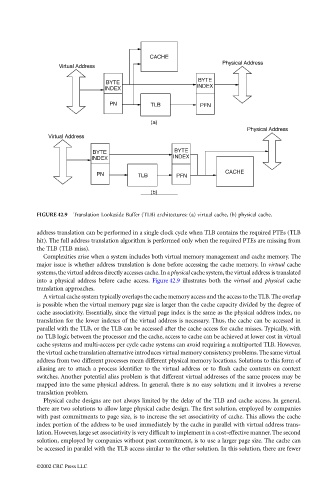

FIGURE 42.9 Translation Lookaside Buffer (TLB) architectures: (a) virtual cache, (b) physical cache.

address translation can be performed in a single clock cycle when the TLB contains the required PTEs (a TLB hit). The full address translation algorithm is performed only when the required PTEs are missing from the TLB (a TLB miss).
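The hit/miss behavior can be sketched in a few lines of Python. This is an illustrative model only. The 4 KiB page size, the dict standing in for the page table, the four-entry capacity and the FIFO replacement policy are all assumptions rather than details from the text. On a hit, the stored PTE is used directly. On a miss, the full translation (here, a page-table lookup) runs and its result is cached.

```python
# Illustrative TLB model. Assumed (hypothetical) parameters: 4 KiB pages,
# a dict as the page table, and FIFO replacement in a small TLB.
from collections import OrderedDict

PAGE_BITS = 12                    # assumed 4 KiB pages
PAGE_SIZE = 1 << PAGE_BITS

class TLB:
    def __init__(self, page_table, capacity=4):
        self.page_table = page_table      # virtual page number -> physical frame number
        self.capacity = capacity
        self.entries = OrderedDict()      # cached PTEs: VPN -> PFN, oldest first
        self.hits = 0
        self.misses = 0

    def translate(self, vaddr):
        vpn = vaddr >> PAGE_BITS          # virtual page number
        offset = vaddr & (PAGE_SIZE - 1)  # page offset is never translated
        if vpn in self.entries:           # TLB hit: the PTE is already cached
            self.hits += 1
            pfn = self.entries[vpn]
        else:                             # TLB miss: full translation via the page table
            self.misses += 1
            pfn = self.page_table[vpn]    # a KeyError here would model a page fault
            if len(self.entries) >= self.capacity:
                self.entries.popitem(last=False)  # evict the oldest entry (FIFO)
            self.entries[vpn] = pfn
        return (pfn << PAGE_BITS) | offset
```

As a usage example, with `tlb = TLB({0: 7, 1: 3})`, the first access to virtual address `0x0010` misses and returns `0x7010`. A second access within the same page hits.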

Complexities arise when a system includes both virtual memory management and cache memory. The major issue is whether address translation is done before the cache is accessed. In virtual cache systems, the virtual address accesses the cache directly. In physical cache systems, the virtual address is translated into a physical address before the cache is accessed. Figure 42.9 illustrates both the virtual and physical cache translation approaches.
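The difference in lookup order can be made concrete with a small model. This is a sketch under stated assumptions, not a hardware description. The block and page sizes are hypothetical, and caches are plain dicts keyed by block address. `Translator` is an invented stand-in for the TLB that counts how often it is consulted. The virtual cache translates only on a miss, while the physical cache translates on every access.

```python
# Hypothetical model of the two organizations in Figure 42.9.
PAGE_BITS = 12   # assumed 4 KiB pages
BLOCK_BITS = 5   # assumed 32-byte cache blocks

class Translator:
    """Stand-in for the TLB/page table; counts how often it is consulted."""
    def __init__(self, page_table):
        self.page_table = page_table      # VPN -> PFN
        self.calls = 0

    def __call__(self, vaddr):
        self.calls += 1
        vpn = vaddr >> PAGE_BITS
        offset = vaddr & ((1 << PAGE_BITS) - 1)
        return (self.page_table[vpn] << PAGE_BITS) | offset

def virtual_cache_read(cache, vaddr, translate, memory):
    # The virtual block address tags the cache; translation happens only on a miss.
    key = vaddr >> BLOCK_BITS
    if key not in cache:
        cache[key] = memory[translate(vaddr) >> BLOCK_BITS]
    return cache[key]

def physical_cache_read(cache, vaddr, translate, memory):
    # Translation happens first on every access; the physical block address tags the cache.
    key = translate(vaddr) >> BLOCK_BITS
    if key not in cache:
        cache[key] = memory[key]
    return cache[key]
```

Two reads of the same block cost one translation with `virtual_cache_read` and two with `physical_cache_read`. The virtual model is keyed only by virtual address, with no process identifier. It would therefore return stale data after a context switch unless entries were tagged or the cache flushed.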

A virtual cache system typically overlaps the cache access with the TLB access. The overlap is possible when the virtual memory page size is at least as large as the cache capacity divided by the degree of cache associativity. The low-order (page offset) bits of a virtual address are identical to those of the corresponding physical address. When the cache index lies entirely within those bits, no translation is needed to index the cache. Thus, the cache can be accessed in parallel with the TLB, or the TLB can be consulted only after a cache miss. Because no TLB logic sits between the processor and the cache, virtual cache systems can typically access the cache at lower cost. In addition, caches that support multiple accesses per cycle can avoid requiring a multiported TLB. However, the virtual cache alternative introduces virtual memory consistency problems. The same virtual address used by two different processes generally refers to different physical memory locations. Solutions to this form of aliasing are to attach a process identifier to the virtual address or to flush the cache contents on context switches. Another potential alias problem is that different virtual addresses of the same process may be mapped to the same physical address. In general, there is no easy solution to this second problem, since resolving it requires reverse (physical-to-virtual) translation.
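The overlap condition is simple arithmetic. One way of the cache spans capacity/associativity bytes, and that span must fit within a single page. The helper names below are hypothetical. The sizes in the usage note are illustrative powers of two and are not taken from the text.

```python
def can_overlap(cache_bytes, associativity, page_bytes):
    """True if one cache way fits within a page, so the cache index comes
    entirely from untranslated page-offset bits and the cache can be
    indexed in parallel with the TLB lookup."""
    way_bytes = cache_bytes // associativity  # bytes spanned by one way
    return page_bytes >= way_bytes

def min_associativity(cache_bytes, page_bytes):
    """Smallest associativity satisfying the overlap condition for a given
    page size (ceiling of cache_bytes / page_bytes, at least 1)."""
    return max(1, -(-cache_bytes // page_bytes))
```

For example, a 32 KiB cache with 4 KiB pages can overlap only if it is at least 8-way set associative. A 64 KiB cache needs 16 ways at the same page size, but only 4 ways with 16 KiB pages. This trade-off between associativity and page size underlies the design choices for large physical caches.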

Physical cache designs are not necessarily limited by the serial delay of a TLB access followed by a cache access. In general, there are two solutions that allow large physical caches to be designed. The first solution, employed by companies with past commitments to a particular page size, is to increase the set associativity of the cache. This allows the cache to use the index portion of the address immediately, in parallel with virtual address translation. However, large set associativity is very difficult to implement cost-effectively. The second solution, employed by companies without such a commitment, is to use a larger page size. As with the first solution, the cache can then be accessed in parallel with the TLB. In this solution, there are fewer

©2002 CRC Press LLC