Page 184 - Neural Network Modeling and Identification of Dynamical Systems

5.2 SEMIEMPIRICAL ANN-BASED MODEL DESIGN PROCESS

The initial guess for the parameter w value is taken to be $w^{(0)} = 8.32$. Training is performed using the Levenberg–Marquardt optimization method. The Jacobian of the error function is computed using the RTRL algorithm. Tuning the parameter w this way provides a minor improvement to the model accuracy; the prediction error has decreased from 0.13947 to 0.13593 for the model discretized by the Euler method and from 0.07143 to 0.07104 for the model discretized by the Adams–Bashforth method.
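The tuning procedure described above can be illustrated with a minimal sketch. It is not the model from (5.4): the toy system dx/dt = -w x, the step size, the trajectory length, and every function name below are assumptions made purely for illustration. The sketch discretizes the toy system with the explicit Euler method, propagates the derivative of the state with respect to w forward in time alongside the state (the idea behind RTRL), and uses that derivative as the Jacobian in a scalar Levenberg–Marquardt iteration with a simple accept/reject rule for the damping factor.

```python
def simulate_with_sensitivity(w, x0, dt, n_steps):
    """Euler-discretized toy model x[k+1] = x[k] + dt * (-w * x[k]).

    Returns the trajectory and its derivative with respect to w, propagated
    forward in time together with the state (the RTRL idea).
    """
    xs, ss = [], []
    x, s = x0, 0.0  # the initial state does not depend on w, so s[0] = 0
    for _ in range(n_steps):
        # Derivative of the update rule with respect to w (uses the old x).
        s = (1.0 - dt * w) * s - dt * x
        x = (1.0 - dt * w) * x
        xs.append(x)
        ss.append(s)
    return xs, ss


def sum_squared_error(w, targets, x0, dt):
    """Sum of squared differences between model output and targets."""
    xs, _ = simulate_with_sensitivity(w, x0, dt, len(targets))
    return sum((x - y) ** 2 for x, y in zip(xs, targets))


def levenberg_marquardt_scalar(w, targets, x0, dt, mu=1e-2, n_iter=30):
    """Levenberg-Marquardt iterations for a single scalar parameter w."""
    loss = sum_squared_error(w, targets, x0, dt)
    for _ in range(n_iter):
        xs, jac = simulate_with_sensitivity(w, x0, dt, len(targets))
        residuals = [x - y for x, y in zip(xs, targets)]
        jtj = sum(j * j for j in jac)                         # J^T J (scalar)
        jte = sum(j * e for j, e in zip(jac, residuals))      # J^T e (scalar)
        candidate = w - jte / (jtj + mu)  # damped Gauss-Newton step
        candidate_loss = sum_squared_error(candidate, targets, x0, dt)
        if candidate_loss < loss:
            # Accept the step and trust the linearization more.
            w, loss, mu = candidate, candidate_loss, mu / 10.0
        else:
            # Reject the step and increase the damping.
            mu *= 10.0
    return w


# Hypothetical data: a noise-free trajectory generated with w_true = 2.0.
W_TRUE, X0, DT, N_STEPS = 2.0, 1.0, 0.01, 200
targets, _ = simulate_with_sensitivity(W_TRUE, X0, DT, N_STEPS)
w_fit = levenberg_marquardt_scalar(1.0, targets, X0, DT)
print(f"estimated w = {w_fit:.6f}")
```

Because the toy data are noise-free and generated by the same model structure, the estimate should recover the generating value of w. In the situation described in the text the structure itself is inadequate, which is why tuning w alone yields only a minor reduction of the prediction error.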

Based on these results, we can conclude that

it is impossible to achieve the required model

accuracy by keeping the original model struc-

ture and tuning values of its parameters; hence

we need to modify the model structure itself.

The exact form of these structural modifications

is suggested by the abovementioned hypothe-

ses concerning the possible reasons for the un-

satisfactory accuracy of the model. Structural

modification is performed on a modular ba-

sis: only specific parts of the model are sub-

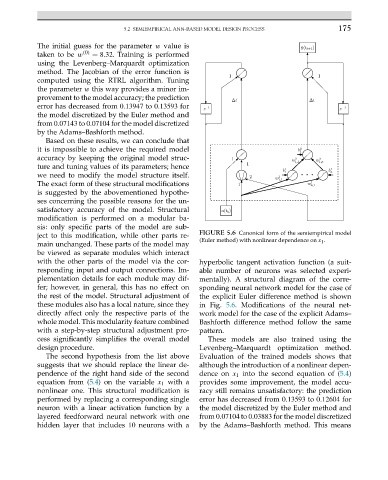

ject to this modification, while other parts re- FIGURE 5.6 Canonical form of the semiempirical model

main unchanged. These parts of the model may (Euler method) with nonlinear dependence on x 1 .

be viewed as separate modules which interact

with the other parts of the model via the cor- hyperbolic tangent activation function (a suit-

responding input and output connections. Im- able number of neurons was selected experi-

plementation details for each module may dif- mentally). A structural diagram of the corre-

fer; however, in general, this has no effect on sponding neural network model for the case of

the rest of the model. Structural adjustment of the explicit Euler difference method is shown

these modules also has a local nature, since they in Fig. 5.6. Modifications of the neural net-

directly affect only the respective parts of the work model for the case of the explicit Adams–

whole model. This modularity feature combined Bashforth difference method follow the same

with a step-by-step structural adjustment pro- pattern.

cess significantly simplifies the overall model These models are also trained using the

design procedure. Levenberg–Marquardt optimization method.

The second hypothesis from the list above Evaluation of the trained models shows that

suggests that we should replace the linear de- although the introduction of a nonlinear depen-

pendence of the right hand side of the second dence on x 1 into the second equation of (5.4)

equation from (5.4) on the variable x 1 with a provides some improvement, the model accu-

nonlinear one. This structural modification is racy still remains unsatisfactory: the prediction

performed by replacing a corresponding single error has decreased from 0.13593 to 0.12604 for

neuron with a linear activation function by a the model discretized by the Euler method and

layered feedforward neural network with one from 0.07104 to 0.03883 for the model discretized

hidden layer that includes 10 neurons with a by the Adams–Bashforth method. This means